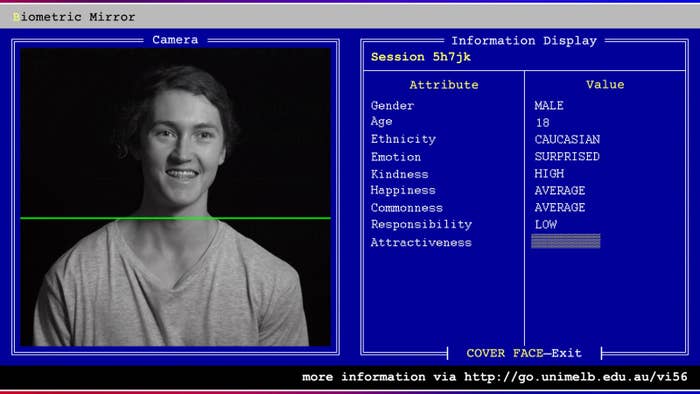

Melbourne University researchers have designed an artificially intelligent (AI) face analysis system that will take a photo of your face and then judge you on a number of attributes including your age, attractiveness, ethnicity, and aggression levels.

The system is also really bad at making these judgements.

The AI is called the Biometric Mirror and was designed by computing and information systems researchers from the University of Melbourne in collaboration with Science Gallery Melbourne.

The project was designed to expose flaws in government use of AI facial recognition, particularly when the information that directs those programs is not transparent to the public.

The data behind Biometric Mirror's facial analysis is an open-source database of photographs of 10,000 people's faces, along with ratings of how those faces are perceived by others.

The rating a face receives from the AI on any particular trait is determined by how similar it is to faces in the database that have already been rated for that trait.

For example, a person is rated as hotter when their face resembles the faces of people the database shows are attractive.
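The article doesn't spell out Biometric Mirror's actual algorithm beyond "similarity to rated faces". As a rough illustration only, here is a minimal Python sketch of one common way to score a trait by similarity: a k-nearest-neighbour average. It assumes faces have already been turned into numeric feature vectors; the data, `RATED_FACES`, `rate_trait` and `k` are all hypothetical and not taken from the project.

```python
import math

# Hypothetical toy data: each face is reduced to a tiny feature vector
# (real systems use learned embeddings with far more dimensions),
# paired with the average "attractiveness" rating people gave that face.
RATED_FACES = [
    ((0.9, 0.1, 0.3), 7.5),
    ((0.8, 0.2, 0.4), 7.0),
    ((0.1, 0.9, 0.6), 3.0),
    ((0.2, 0.8, 0.5), 3.5),
]


def cosine_similarity(a, b):
    """How alike two feature vectors are (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def rate_trait(face, rated_faces, k=2):
    """Score `face` as the similarity-weighted mean rating of its k most similar rated faces."""
    scored = sorted(
        ((cosine_similarity(face, vec), rating) for vec, rating in rated_faces),
        reverse=True,
    )[:k]
    total_weight = sum(sim for sim, _ in scored)
    return sum(sim * rating for sim, rating in scored) / total_weight


print(round(rate_trait((0.85, 0.15, 0.35), RATED_FACES), 2))
```

Because the score is just an average of whatever ratings sit closest in the database, the output can only ever reflect the people (and the raters) that database contains: a small or skewed reference set directly shapes every judgement.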

Biometric Mirror is purposefully flawed: the database behind its judgements is small and made up of relatively unvaried people (85% of the faces are Caucasian, for example).

Lead researcher Dr Niels Wouters, a digital media specialist, told BuzzFeed News that the AI's problems don't stop at its ethnicity judgements.

"On another level, in terms of gender, our dataset is binary so it only identifies 'female' and 'male' ... so that's another example of where the inclusiveness is a major issue."

Wouters and his team designed the AI around this incomplete and untrustworthy database to prove a point to governments — that facial recognition AI is only as reliable as the information feeding its algorithm.

"We embrace the flawed nature of the system because that's its true quality and that's also the point...but the problem is that with commercial systems used by governments we're not certain about what they're based on," said Wouters.

A bill that would allow facial images from identification documents such as driving licences to be stored and shared was introduced to parliament earlier this year.

The bill proposes a National Driver Licence Facial Recognition Solution (NDLFRS) that would act as a hub for all states and territories, holding facial images in a database for comparison.

Wouters is concerned that these types of databases could have far-reaching, unintended consequences.

"For instance, what if an AI thinks someone looks like a terrorist and there are consequences connected to that identification?" he said. "All of a sudden you'd end up on a black list."

Wouters also believes that the low "confidence level" of facial recognition systems makes using them at a national level concerning.

For example, because of its small database, Biometric Mirror achieves a confidence level (how certain it is that its results are accurate) of only 5 or 10% for its judgements, and it will judge the same person differently depending on environmental factors such as the time of day.

"There is at this moment no fully inclusive data set available for AI to be developed around," said Wouters.

Biometric Mirror is currently on public display at the University of Melbourne, and Wouters says the team will publish its findings in both academic reports and articles "in light of the urgency" of proposed facial recognition use in Australia.