A new release of data by Twitter and a huge study of browser and search engine data by Microsoft Research give the clearest view yet of the strategies employed by Russia’s online trolls to sow discord in US politics.

Most Russian trolls made little headway, but the star performers scored some big hits. Sometimes they beat the mainstream news media to coverage of divisive events. On other occasions, their tweets were embedded in widely read news stories. And in March 2017, one story from a fake black identity news site operated by the Kremlin got a huge traffic surge thanks to a high ranking in Microsoft’s Bing search engine.

It all demonstrates “the ease with which malicious actors can harness social media and search engines for propaganda campaigns,” the Microsoft study found.

But it’s still unclear whether the Kremlin’s online content has changed Americans’ behavior in a meaningful way. The Microsoft researchers could find no evidence that reading Russian posts increased political donations, prompted people to attend demonstrations, or even altered their overall consumption of online news.

The study also suggests that the Internet Research Agency, which runs the Kremlin’s online disinformation efforts from its headquarters in St Petersburg, had to work harder to engage Americans with content designed to appeal to partisans on the left. The Microsoft Research team found that purchased Facebook ads were crucial for driving traffic spikes for the Internet Research Agency’s left-wing content, whereas right-wing posts that took off went viral without this assistance.

A BuzzFeed News analysis of Russian troll tweets before and after the 2016 presidential election shows that they became more active, and got more traction, after Donald Trump’s victory. In the year before the election, right-wing troll accounts amassed a total of 3.5 million retweets, averaging 23 per tweet. Their counterparts on the left managed 2.2 million retweets, averaging just 12 per tweet. In the year after, right-wing trolls still led the overall retweet count, scoring 7.5 million retweets to the left-wing trolls’ 5.2 million. But the left-wing trolls were getting an average of 21 retweets per tweet to the right-wing trolls’ 14.
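The totals and averages quoted above also imply roughly how many tweets each group posted. The following is a back-of-the-envelope sketch in Python, not part of the BuzzFeed News analysis. It assumes the rounded figures can be treated as exact, so the results are only rough estimates, and the function name `implied_tweet_count` is an illustrative choice.

```python
# Rounded figures quoted above: (total retweets, average retweets per tweet).
# Treating them as exact is an assumption, so the results are rough estimates.
reported = {
    ("right", "year before election"): (3_500_000, 23),
    ("left", "year before election"): (2_200_000, 12),
    ("right", "year after election"): (7_500_000, 14),
    ("left", "year after election"): (5_200_000, 21),
}

def implied_tweet_count(total_retweets, avg_per_tweet):
    """Estimate the number of tweets from a total and a per-tweet average."""
    return total_retweets / avg_per_tweet

implied = {key: implied_tweet_count(*vals) for key, vals in reported.items()}

for (side, period), n in implied.items():
    print(f"{side:>5} trolls, {period}: about {n:,.0f} tweets")
```

On these rough estimates, the right-wing accounts' output grew by a factor of about 3.5 after the election and the left-wing accounts' by about 1.35, which is consistent with the finding that the trolls became more active.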

The trolls’ success after the election presumably suits Vladimir Putin just fine, given the Internet Research Agency’s “strategic goal to sow discord,” as described in a February 2018 indictment of 13 Russian operatives for online interference in US politics from special counsel Robert Mueller.

On Oct. 16, Twitter released a trove of data on accounts it had flagged as Russian trolls and shut down. Data on most of the 3.26 million English-language tweets from these accounts was released in July by researchers Darren Linvill and Patrick Warren of Clemson University in South Carolina. But the Clemson data lacked information on engagement with each tweet, now provided by Twitter.

BuzzFeed News looked at retweet counts and found that the most viral tweets were posted by accounts that Linvill and Warren called Left Trolls, which pretended to be liberal black Americans tweeting about black identity issues.

The most retweeted tweet from a Trump-supporting “Right Troll” account was ranked 46th on the list.

While the Left Trolls dominated the top few viral tweets, overall the Right Trolls did better in getting their content shared. Most of the trolls’ tweets had little impact: Just 79 retweets was enough to make the top 1%. But Right Trolls scored 19,502 of these tweets, compared to 12,869 from the Left Trolls.
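One way a "top 1%" cutoff like this could be computed is sketched below in Python. This is not BuzzFeed News's actual analysis code: the field names (`category`, `retweets`), the helper `top_share_summary`, and the toy data are all hypothetical and made up for illustration.

```python
import math
from collections import Counter

def top_share_summary(tweets, share=0.01):
    """Return (cutoff, per-category counts) for the most-retweeted `share` of tweets.

    `tweets` is a list of dicts with hypothetical keys "category" and "retweets".
    Ties at the cutoff are included, so slightly more than `share` may be counted.
    """
    counts = sorted((t["retweets"] for t in tweets), reverse=True)
    k = max(1, math.ceil(len(counts) * share))  # size of the top slice
    cutoff = counts[k - 1]                      # fewest retweets that still qualify
    by_category = Counter(t["category"] for t in tweets if t["retweets"] >= cutoff)
    return cutoff, by_category

# Made-up toy data: 200 tweets, most with few retweets.
toy = [{"category": "RightTroll" if i % 2 else "LeftTroll", "retweets": i % 50}
       for i in range(198)]
toy += [{"category": "LeftTroll", "retweets": 900},
        {"category": "RightTroll", "retweets": 400}]

cutoff, by_category = top_share_summary(toy)
print(cutoff, dict(by_category))
```

Applied to the real dataset, the same kind of calculation would yield a cutoff and per-category counts comparable to those reported above, though the exact handling of ties and retweeted content is an assumption here.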

Much of the retweeting of viral tweets may have been by bots of various kinds. But according to Twitter, the retweet counts exclude retweets by banned accounts, so the numbers don’t include the known trolls retweeting one another. What’s more, the BuzzFeed News analysis also revealed that fewer than 138,000 of the 3.26 million tweets by the Russian trolls were retweets of other known Kremlin trolls.

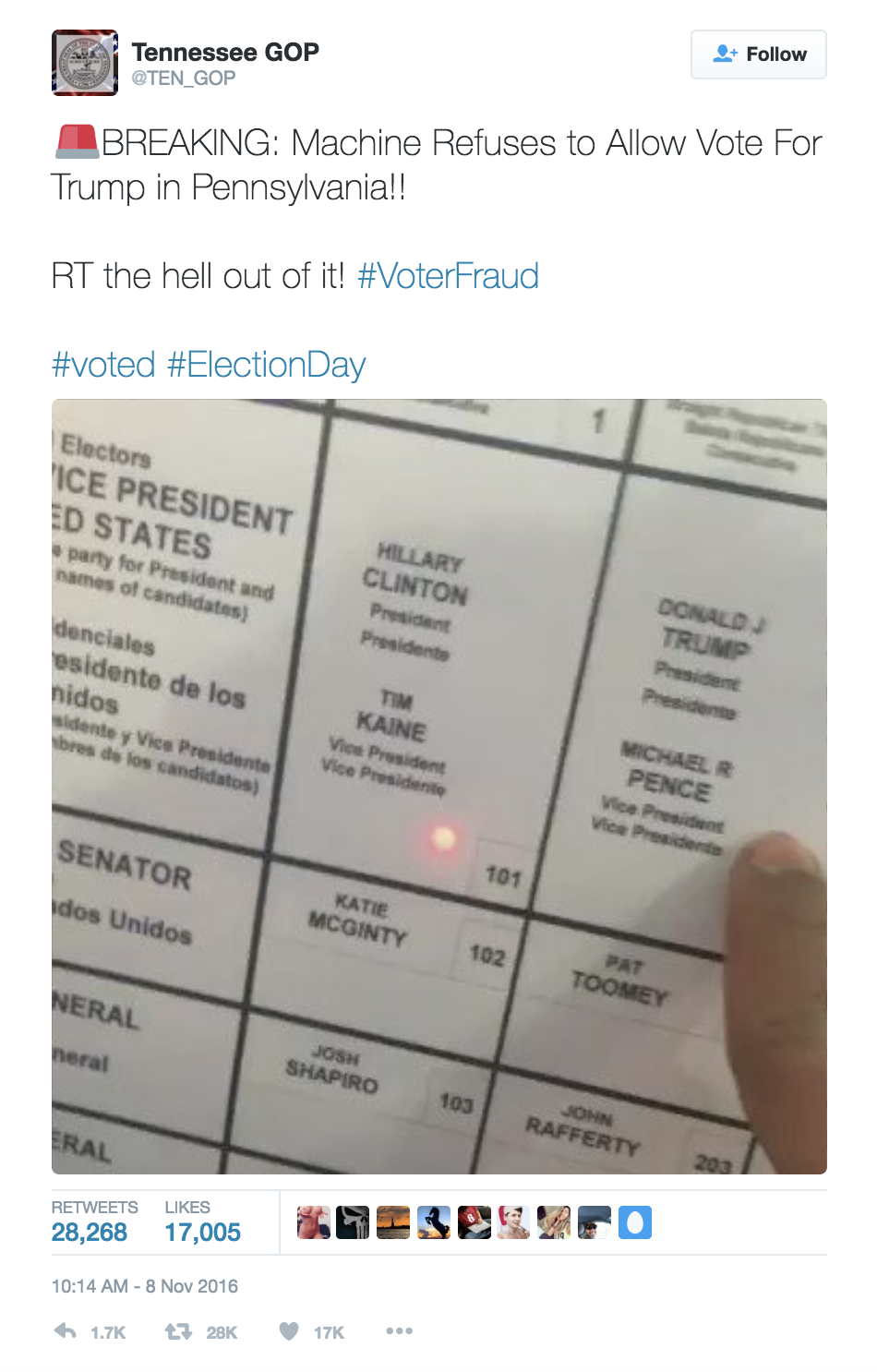

The @TEN_GOP account, billed as the “Unofficial Twitter of Tennessee Republicans” and including the #MAGA hashtag in its profile description, was the standout performer in the Kremlin’s troll army.

From November 2015 until it tweeted its last in August 2017, @TEN_GOP gained nearly 150,000 followers, tweeted more than 10,000 times, and amassed almost 6 million retweets — more than a quarter of all retweets accumulated by the Kremlin’s English-language trolls. On average, each of @TEN_GOP’s tweets was retweeted 571 times.

The Internet Research Agency seemed worried that it would lose @TEN_GOP. @ELEVEN_GOP, also set up in November 2015, stated in its profile: “This is our back-up account in case anything happens to @TEN_GOP.” And on the day of @TEN_GOP’s demise, Aug. 24, 2017, @realTEN_GOP was created.

Retweets are only one measure of success on Twitter. Through data gathered by Microsoft’s Edge and Internet Explorer browsers, Alexander Spangher and his colleagues at Microsoft Research calculated traffic to Russian Twitter trolls from Jan. 1 to Aug. 1, 2017. (This didn’t register simple retweets and likes, but counted users actively clicking on a tweet or Twitter profile, or clicking on a post in which a tweet was embedded.)

Their analysis confirmed the dominance of a few highly successful accounts. @TEN_GOP was again the juggernaut, accounting for 46.2% of all clicks on Russian troll tweets and Twitter profiles. Its nearest Left Troll rival, @Crystal1Johnson, which posed as a black woman from Richmond, Virginia, managed just 7.6% of the total clicks.

The Microsoft Research team’s traffic analysis also revealed how the troll accounts leveraged breaking news events. On occasion, news outlets were duped into including Russian troll tweets in their coverage. One example was a story at MSN News reporting on claims that a Muslim woman ignored victims of the March 22, 2017, terror attack outside the UK parliament, wandering past while looking at her phone. It quoted and linked to a tweet from the Right Troll account @SouthLoneStar that included the hashtag #BanIslam.

@TEN_GOP’s biggest traffic spike was for a June 30, 2017, tweet about the prior criminal record of Henry Bello, a Nigerian-born doctor who on that day opened fire with a semi-automatic rifle at the Bronx-Lebanon Hospital in New York City, killing another doctor and wounding six people before turning his gun on himself.

Putting out tweets before major news outlets had time to publish their stories meant that trolls could feed on public curiosity about breaking news. “That tweet got loads of traffic on search,” Spangher told BuzzFeed News.

Some of the trolls’ most successful content was not actually very incendiary. The post that got a traffic spike from Bing in March 2017 was a short inspirational piece about black women computer scientists, published on the site BlackMattersUS, set up by the Internet Research Agency and still active today.

This is typical of what Spangher, who is now working toward a PhD in computer science and machine learning at Carnegie Mellon University, calls the trolls’ “engagement funnel.” They seemed to have a strategy of pulling people in with neutral content with the hope of exposing them to more inflammatory posts later on. “They were playing a long game,” Spangher said.

How successful this strategy was in fomenting division remains unclear. Even though some tweets and Facebook posts went viral, the Microsoft researchers concluded that on any given day in 2017, only 1 in 40,000 Internet users actually clicked on Internet Research Agency content.

Because web browser log data is anonymized, it was hard to study how the content influenced individual people’s behavior. But the Microsoft research team could find no obvious correlations between places where there was heavy traffic to Russian troll content and real-world protests. And within browsing sessions, there was no significant effect on traffic to hyperpartisan news outlets or to sites soliciting political donations.

The full influence of Russian troll content will become clear only if the big tech companies collaborate to share their huge databases on user behavior. The Microsoft researchers couldn’t tell, for instance, if being exposed to Russian troll content changed people’s posting and commenting on Facebook.

“As the digital world continues evolve [sic], and risks to democracy continue to emerge, openness and cooperation among major stakeholders will be essential to understand and counter malevolent threats,” their paper concludes.

Russian online trolls remain active today, and are trying to influence next month’s US midterm elections. Last week, federal prosecutors filed charges against a Russian woman for her role in an ongoing social media election interference campaign. According to the indictment, Russian online trolls, again posing as US partisans on the left and right, were directed to attack mainstream media and prominent figures including special counsel Mueller, and to foment division on topics from immigration to gun control.