Today, Instagram is rolling out new features aimed at making it harder to troll and harass users, and at protecting some of its most vulnerable users.

1. A big new change that’ll make it easier to eliminate creepers: You’ll be able to limit who can comment on your posts. There will be four options:

- Everyone
- People you follow
- People who follow you
- People who follow you and people you follow

This option is available only to public accounts. Previously, you could block commenters only one by one, and there was no way to restrict large groups of people at once.

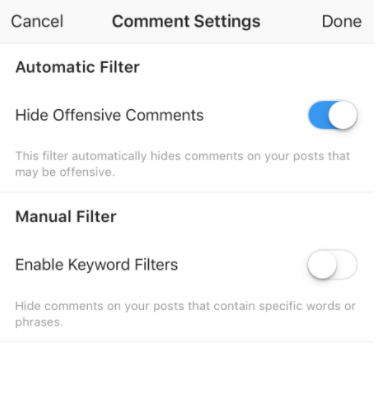

2. The “hide offensive comments” feature will now be available in French, German, Portuguese, and Arabic. The feature launched in English in June as a way of blocking certain words, but it has since expanded into a more robust, AI-powered system that detects the nuances of harassing comments.

To turn it on, go into the “Comments” section of the Instagram app, and toggle on the automatic filter:

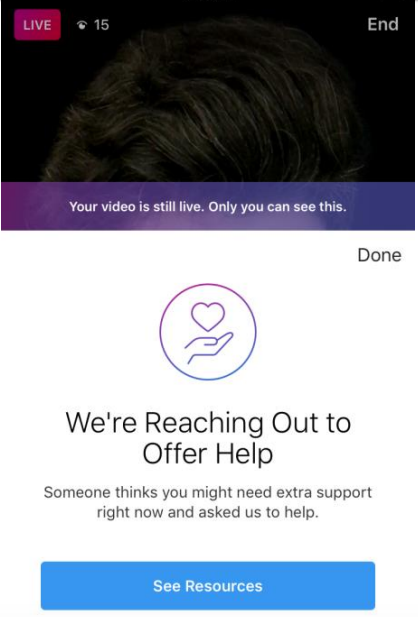

3. The last feature is an enhancement to Instagram's mental health and safety tool, which aims to help people who post about self-injury or suicide. Currently, when you report a post, Story, or live video for self-harm or suicide content, the person who shared it is shown a notification that says “we’re reaching out to offer help,” along with a menu of support options.

Starting today, that menu will also appear during a livestream if someone who is worried about you reports it for self-harm. Previously, the menu appeared only after your livestream had ended, which might be too late. You can browse the options in the menu anonymously while you are still live, then return seamlessly to your stream.

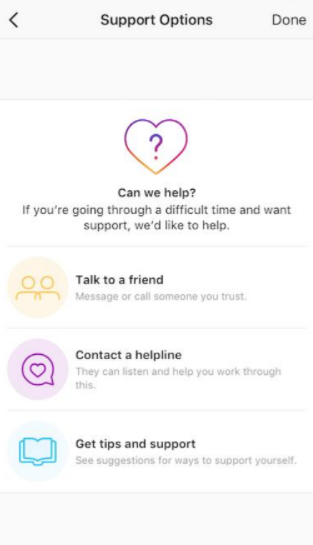

The resources suggest contacting a friend, calling a helpline, or getting tips and support (the specific support links and helplines vary by country).

For this to be effective, reports must be reviewed and processed by human moderators very quickly, while the person is still streaming. Instagram says of its moderation efforts, “we have teams working 24 hours a day, seven days a week, around the world to be there when people need us most. This is an important step in ensuring that people get help wherever they are — on Instagram or off.”