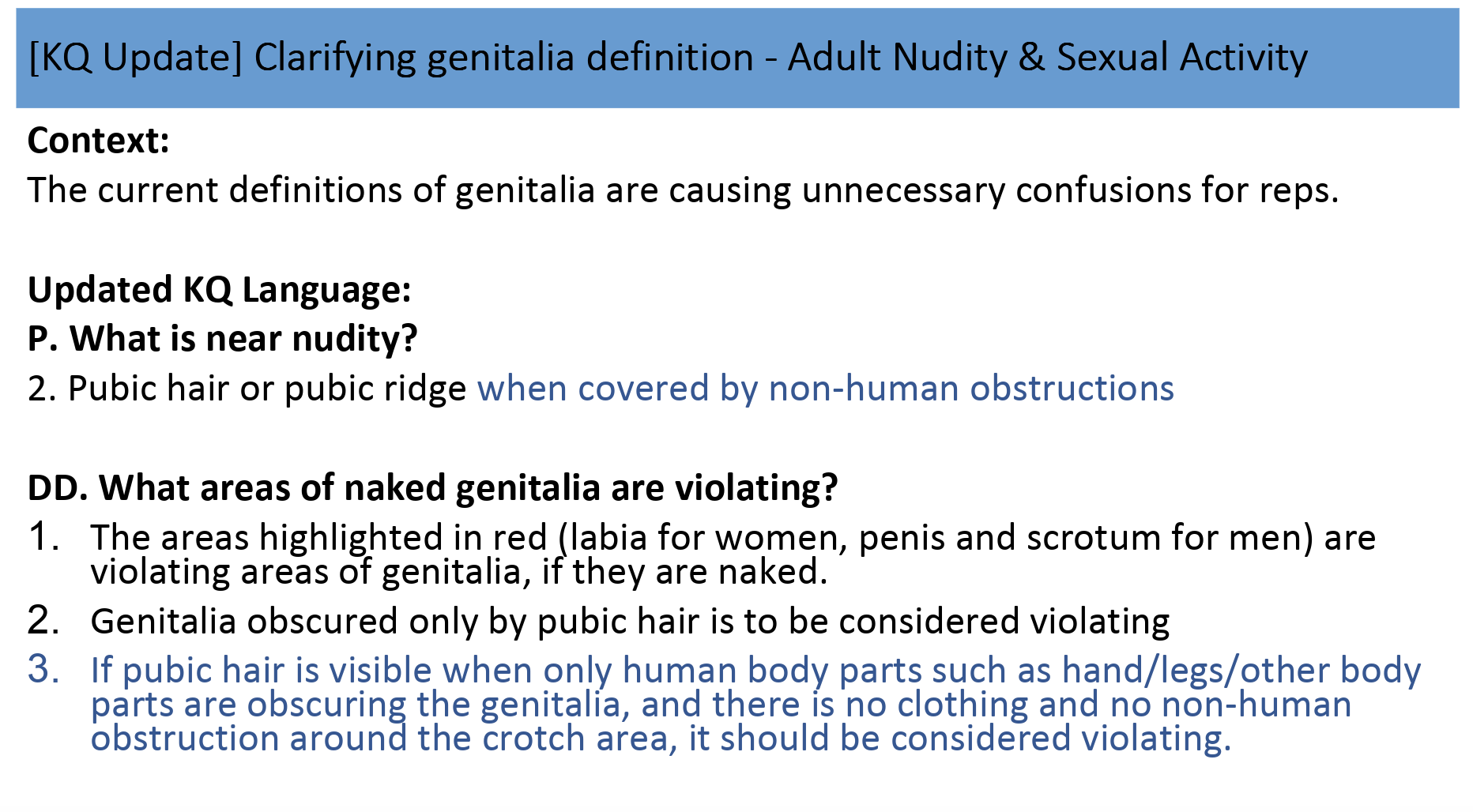

When actor Burt Reynolds died in 2018, fans across Instagram and Facebook paid tribute to him by posting his 1972 Cosmopolitan magazine centerfold. In the photo, a nude Reynolds reclines on a bearskin rug, his left arm strategically covering his crotch. While an iconic celebrity photo, the image violated Facebook's moderation rules. For one, it featured visible pubic hair (a no-no), and while Reynolds' penis was obscured by his arm, Facebook only allows nonhuman objects covering genitals.

So Facebook's third-party moderators followed the rules: Burt’s bush had to go.

Fans noticed their tributes being deleted, and they complained on Twitter, which led to media outlets reporting on the debacle. By the next day, Facebook had told the media that the photo was incorrectly removed by automatic nudity detection and that the photos would be restored.

However, it wasn’t until four days later that the teams of outsourced moderators who work at firms like Cognizant and Accenture were informed that Facebook had made an exception for Reynolds’ pubes.

But by then the news cycle around Reynolds' death was long over. The moderators had needlessly spent untold hours deleting a newsworthy photo and upsetting people trying to pay tribute on Facebook.

For moderators at Cognizant and Accenture, this was yet another instance of the confusing, often poorly explained rules and convoluted communication between them and Facebook that make their job nearly impossible. In interviews with BuzzFeed News, former and current moderators described policing content for Facebook as a grueling job made worse by poor management, lousy communication, and whipsaw policy changes that made them feel set up to fail. BuzzFeed News obtained hundreds of pages of leaked documents about Facebook's moderation policy. The documents, which contain often ambiguous language, Byzantine charts, and an indigestible barrage of instructions, reveal just how daunting a moderator's job can be.

Moderating a platform of over a billion people is a daunting task — particularly at a global level. It’s unreasonable to expect Facebook to seamlessly police all the content that people post on it.

But Facebook is also a $533 billion company with nearly 40,000 employees. It’s had years to craft these policies. It has the incentives, the resources, and the time to get it right. And it’s trying. On Sept. 12, the company released an updated set of values that it said would guide the company’s community standards — including authenticity, safety, privacy, and dignity. In a statement to BuzzFeed News, a Facebook spokesperson said: “Content review on a global scale is complex and the rules governing our platform have to evolve as society does. With billions of pieces of content posted to our platforms every day, it’s critical these rules are communicated efficiently. That’s a constant effort and one we’re committed to getting right.”

But no matter the lofty principles, the moderators who spoke to BuzzFeed News say the outsourcing system by which Facebook governs the content on its platform is fundamentally broken.

“Premature death”

When the rapper XXXTentacion was shot and killed in June 2018, Facebook's third-party moderators weren’t sure how to handle the flood of content about his death. Some posts outright mocked his death, while others pointed to his arrest for domestic battery and false imprisonment against his pregnant girlfriend and criticized him for being an abuser.

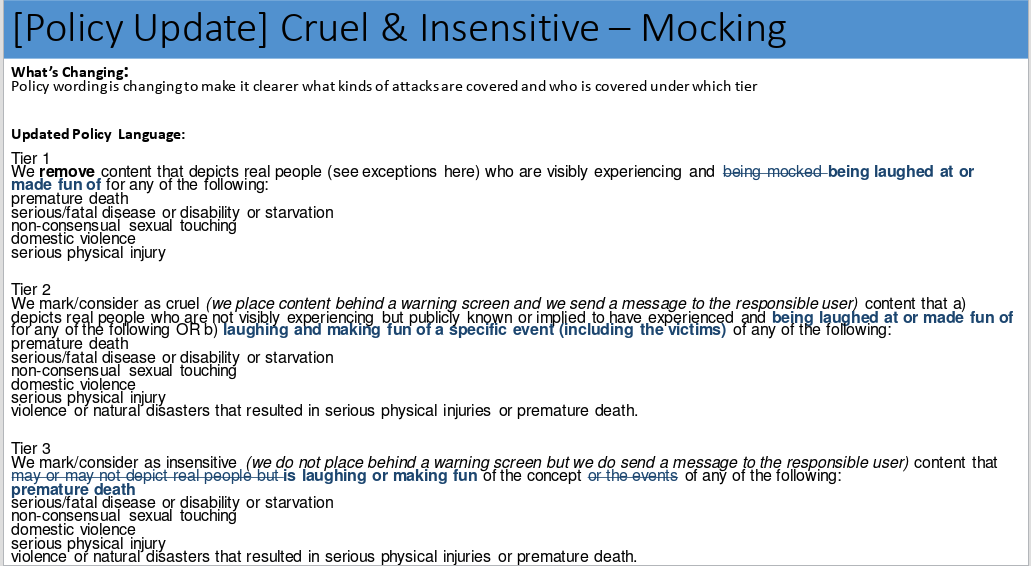

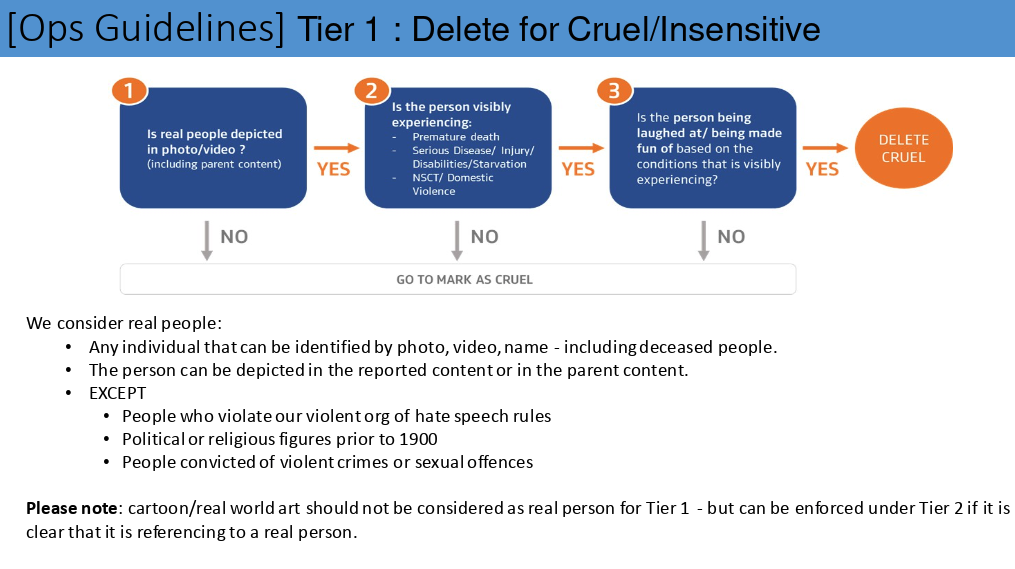

Facebook’s policies offer a variety of ways for moderating content that is “cruel and insensitive,” depending on context and severity. A moderator could delete the post altogether, "mark as cruel" (which blurs the image for others and sends a warning message to the user), "mark as insensitive" (which doesn't blur but sends a warning message to the user), or leave it alone. Typically, mocking someone’s death would get a “mark as cruel,” but the rules weren’t clear on how to handle this unusual case.

Moderators at Cognizant raised concerns about it to team leaders, who told them to continue to “mark as cruel” any mocking content, even if it suggested the rapper was an abuser. “Some people had completely valid posts like ‘fuck domestic abusers like X’ deleted because we thought that counted as mocking somehow,” a former moderator told BuzzFeed News.

Eventually, those leaders at Cognizant flagged it to Facebook as a trend they were seeing, and Facebook told them to do the opposite: XXXTentacion was exempt from the rule that forbids mocking his death because he was a “violent criminal.” Mocking his death was allowed.

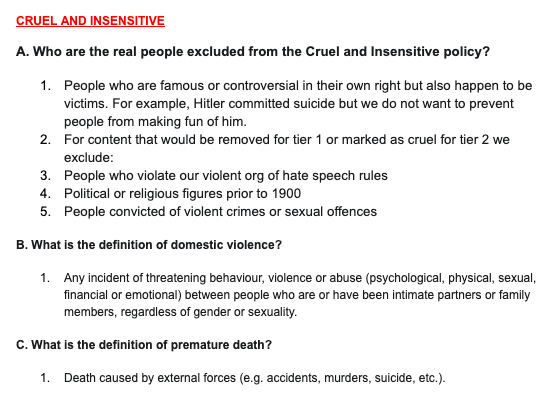

So the mods stopped marking posts that condemned his domestic violence as well as just plain mocking ones as “cruel.” But they were still confused. Facebook’s rules say that people convicted of a violent or sexual crime are exempt from the rules forbidding cruel mocking. But XXXTentacion died awaiting trial — he was never convicted.

"Since he was known to be exempt, it went shockingly far in the other direction. People were allowed to make cruel jokes about murder generally,” one former moderator explained. “And because the punchline was XXXTentacion, it was allowed.”

Another former moderator said, “I remember it caused a lot of tension.”

Two months later, the death of John McCain, a war hero, Arizona senator, and the 2008 Republican nominee for president, inspired a flood of reactions — some of them memes mocking his brain tumor. Once again, Facebook's third-party moderators weren’t sure what to do. The issue wasn’t whether the memes were tasteless, but whether McCain’s death was “premature.” Under Facebook’s policies, mocking a death is moderated differently than mocking a “premature” death. The senator was 81 years old and died of an illness, but moderators weren’t sure if that qualified as premature.

“We had no set definition of ‘premature death,’” a third-party moderator told BuzzFeed News of Facebook policy at the time of McCain’s death. “I just think people were considering all deaths to be premature. We also applied it to Aretha Franklin, for example.” (Franklin was 76 years old when she died of a tumor in her pancreas.) Another moderator who worked on appeals recalled debating in their office whether the fact that McCain was over the average life expectancy for American men meant it couldn’t be considered premature.

Confusion around moderating posts responding to McCain’s death was made worse by another Facebook rule forbidding mockery of someone with a serious illness. Moderators marked these posts as cruel as well, including, for example, an Onion headline that read, “Trump Bestows Medal Of Honor On McCain’s Tumor.”

Moderators who spoke to BuzzFeed News said that only after McCain’s nationally televised funeral did higher-ups at Cognizant ask Facebook to explain just what it meant by “premature death.” A few months later, the company added a new definition to its official policy: “Death caused by external forces (e.g. accidents, murders, suicide, etc.).”

The verdict was in long after it was most needed: mocking McCain for dying was allowed, but mocking him for having cancer was not.

“A communication bottleneck going both directions”

For moderators struggling to do good work under conditions described as PTSD-inducing, Facebook’s mercurial and ever-evolving policies added another stressor. Many who requested anonymity for fear of retribution described to BuzzFeed News a systemic breakdown of communication between Facebook’s policymakers, the Facebook employees charged with communicating that policy, and the senior staff at the outsourced firms charged with explaining it to the moderation teams. Sometimes the outsourcing firms misinterpreted an update in policy. Sometimes Facebook’s guidance came far too late. Sometimes the company issued policy updates it subsequently changed or retracted entirely.

“From an outside perspective, it doesn’t make sense,” a Facebook moderator at Accenture told BuzzFeed News. “But having worked the system for years, there’s some logic.”

The real problem, moderators say, is the cases that don’t fall squarely under written policy or that test the rules in unexpected ways. And there are many of them.

“These edge cases are what cause problems with [moderators],” another moderator explained. “There’s no mechanism built in for edge cases; Facebook writes the policy for things that are going to capture the majority of the content, and rely on these clarifications to address the edge cases. But that requires quick communication.”

That communication can sometimes take days. Last Christmas, moderators at the outsourced sites, which remained open, saw Facebook's response time slow so dramatically that many believed the Facebook teams that oversaw their work had been given the winter holidays off. Facebook did not answer questions about holiday staffing.

Facebook develops moderation policies itself, but refines them with help from another group of outsourced workers at Accenture. Based in Facebook’s Austin offices, this team reviews moderation decisions made by both humans and AI to look for inconsistencies that might suggest a policy should be made more clear or changed. But even those workers say it’s a struggle to collaborate with Facebook — even inside the company’s own offices. “It’s a communication bottleneck going both directions,” one current Accenture worker told BuzzFeed News.

A spokesperson for Accenture declined to comment, referring questions to Facebook.

For the rank-and-file moderators at Cognizant facilities, who rarely communicate directly with actual Facebook employees, the bottleneck is even worse. When they have a question about a policy that isn’t clear, team leaders log it in a spreadsheet of queries to be put to Facebook. But current and former moderators who spoke to BuzzFeed News said the questions on that spreadsheet often go unanswered. Sometimes an individual moderator is given an answer that should have been more widely shared, and the question is asked again. And again. Since moderators cannot communicate directly with Facebook, their questions are filtered through their Cognizant superiors, which adds another layer of murkiness to everything.

“All information was filtered between the client and the contractor and then down to us,” a former moderator told BuzzFeed News. “Nothing is ever clear from our perspective. Most people who were on my level of the job don’t really distinguish/understand when clarifications come from Facebook versus someone internally at Cognizant.”

Tarleton Gillespie, author of Custodians of the Internet and a senior principal researcher at Microsoft Research, said experiences like these are inevitable when moderating a platform that grew very rapidly to over 2 billion users.

“Facebook has had to build out this massive apparatus for content moderation so quickly, their priority has been getting as many bodies in place as quickly as possible,” Gillespie told BuzzFeed News. “They’re only now catching up on the logistics, like how to distribute clear and updated guidance on how to handle specific problems, especially ones that break quickly and unexpectedly.”

But that’s only one facet of the issue. “There’s a second problem,” Gillespie explained. “Facebook pretends like there are clear policies and decisions at the top, that the challenge is simply how to convey them down the line. That’s not how content moderation works. The policies aren’t clear at the top, and moderators are constantly being tested and surprised by what users come up with.”

Cognizant moderators receive four weeks of training on Facebook’s policies before they are put to work. Once training is over, they begin work by reporting to a different layer of Cognizant leadership called the policy team, which is responsible for interpreting Facebook’s rules. The company did not respond to questions about the training members of this team are given. Moderators who spoke to BuzzFeed News said the policy teams they work with typically consist of about six to seven people, including those who oversee the Spanish-language moderators.

Facebook moderators working at Cognizant’s Phoenix location told BuzzFeed News there is just one middle manager who is mostly in charge of interpreting Facebook’s policies — a single guy who doesn’t work for Facebook but is charged with interpreting the nuance of its policy shifts for moderators struggling to police the platform’s fire hose of content. “The entire operation is leaning on one guy’s understanding,” one moderator told BuzzFeed News.

Cognizant did not respond to requests for comment.

“Far harder than any class I took in college”

To be fair, there is a lot of nuance for Facebook to communicate when it comes to content moderation. That’s painfully apparent in the bimonthly policy updates the company distributes to outsourcers like Cognizant. These typically arrive as 10- to 20-page slideshow presentations and cover small tweaks in policy; flag new kinds of harassment, bullying, hate speech, and hate groups; and provide updates on content that should be preserved or removed. On their own, the updates seem to make sense. But taken together, they reveal the endless game of whack-a-mole Facebook plays with hate speech, bullying, crime, and exploitation — and the rat’s nest of policy tweaks and reversals that moderators, who are under extreme pressure to get high accuracy scores, are expected to navigate.

“It does seem all over the place and impossible to align these guideline changes,” said a current moderator. “Say you’re reviewing a post where the user is clearly selling marijuana, but the post doesn’t explicitly mention it’s for sale. That doesn’t meet the guidelines to be removed. ... But then later an update will come up saying the ‘spirit of the policy’ should guide us to delete the post for the sale of drugs. Which is why no one can ever align what the spirit of the policy is versus what the policy actually states.”

BuzzFeed News reviewed 18 such policy updates issued between 2018 and 2019; topics they addressed included sexual solicitation of minors, animal abuse, hate speech, and violence, among a host of other things. For example, an update about the sale of animals is tucked into another update that outlines broad changes to how Facebook moderates posts about eating disorders and self-harm. “It’s just not something $15/hour employees are equipped for,” said a former Cognizant moderator. “Understanding and applying this policy is far harder than any class I took in college.”

Beyond the endless policy tweaks is the material that moderators are charged with reviewing.

Earlier this year, the Verge published a pair of investigations in which Facebook moderators at Cognizant's offices in Phoenix and Tampa, Florida, described experiencing PTSD-like symptoms after working on Facebook posts. A quick review of a Facebook policy update deck from December 2018 offers a good idea why.

Slide 1 advises that the barf emoji — which earlier in the year had been designated as a form of hate speech in the appropriate context — is now also classified under Facebook’s rules against bullying.

Slide 2 is a new update about how to handle images of dead suicide bombers.

Slide 3 is a minor change in language about cannibalism images (images of just a cannibal without the victim will now be allowed).

Slide 4 clarifies the rules about adults inappropriately soliciting children.

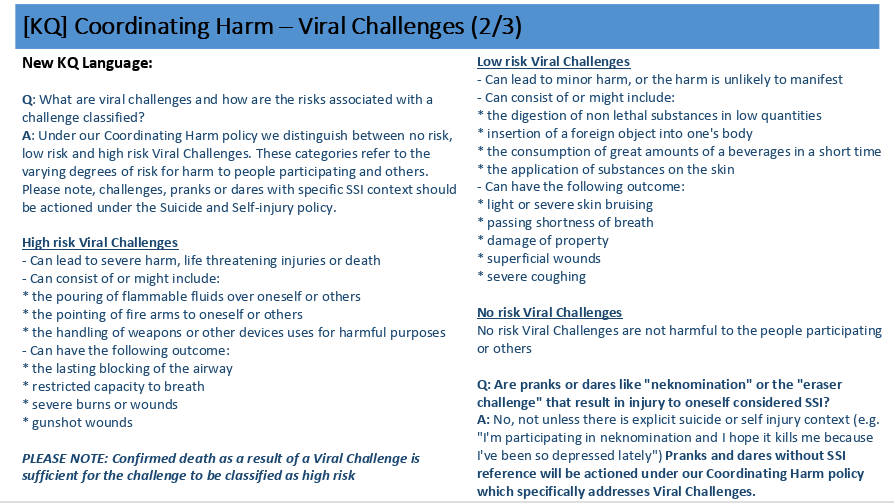

Slide 5 adds new rules for taking down images or videos of viral challenges that cause harm (text and captions about the challenges are acceptable if they’re condemning the challenge).

Slide 6 gives more details on what constitutes a “high-risk viral challenge” (pouring flammable liquids on yourself or handling guns) versus a low-risk viral challenge (light or severe bruising, property damage, superficial wounds, severe coughing).

And on it goes: slides about moderating hate organizations, updates on classifying banned content from terrorist groups, how to handle ads for prostitution in Facebook Groups. Read individually, the updates often make perfect sense. But taken as a group, they are a disorganized mess of the mundane (barf emoji) and the truly horrific (dead suicide bombers) that current and former moderators said they struggle to parse and digest.

On June 25, a heartbreaking photo of a Salvadoran father and toddler daughter who drowned while trying to enter the United States went viral. Within the hour, it was the top news story of the day; the next night, it was discussed in the Democratic presidential debate. But Facebook’s third-party moderators weren’t sure how to handle it.

Facebook policy says that images depicting violent death should be “marked as disturbing,” which would put a blurred overlay on the image that a viewer would have to click through to see.

The question was whether their tragic drowning would be considered a “violent” death, per Facebook’s rules. “I overheard someone saying that day, ‘Well, you know, it’s drowning, but someone didn’t drown them, so why would it be a violent death?’” a moderator told BuzzFeed News.

At 4 p.m. Arizona time, over 24 hours after the photo was first published, leaders at Cognizant posted their guidance: The photo should be considered a violent death and marked as disturbing. The next morning, leaders issued another update, reversing a newsworthy exception for a famous photo of a Syrian boy who had drowned in 2015. That photo should now also be marked as disturbing and receive the blurred overlay.

Sept. 6 was the one-year anniversary of Burt Reynolds’ death. I tried posting his iconic nude photo to Instagram. Within seconds of posting, my image was taken down by bots, which Facebook could use one day to replace the human moderator entirely. I sent out an appeal, and Burt was restored. The system worked; the humans on the outsourced appeals team remembered the exemption for Burt’s beefcake centerfold.

Writing flawless moderation policies at this scale is simply an impossible task, and there will always be news events that set new standards. But the practice of outsourcing moderation reveals unforced errors, which are especially noticeable during those news events. Facebook’s policies are only as good as the most rookie moderator on the job, and the worst communication bottlenecks at the outsourced facilities, allow them to be.

“This is the outcome of a system that is fractured and stratified organizationally, geographically, structurally, etc.,” said Sarah T. Roberts, assistant professor of information studies at UCLA and author of Behind the Screen, a book about social media content moderators. “It’s not as if social media is the first industry to outsource. The tech industry get treated as if their cases are sui generis, but in reality they're just corporate entities doing the same things other corporate entities have done. When a firm like H&M or Walmart has a global production chain, and a factory collapses in Bangladesh, they can reasonably claim that Gosh, we didn’t even know our clothes were made there because their supply chain is so fractured and convoluted. I'm not alleging that Facebook and other social media companies have deliberately set up that kind of structure. I don't have any evidence to support that. But what I do know is that there are pitfalls in such a structure that one could reasonably foresee.”

Facebook says it's working hard to improve communication between its in-house policy and moderation teams and its third-party moderators. It recently implemented a new way for outside mods to suggest policy changes and whatnot to the company. Facebook touts the move as giving moderators the direct line to the social network they've long needed. Maybe it is.

And even if it isn't, it's a start. Facebook clearly needs to do something; its current system is untenable. That's apparent to the people whose job it is to use it, and to the people whose experiences on Facebook (and on Instagram, which Facebook owns and which has the same moderation rules) are affected by it.

As one third-party Facebook moderator told BuzzFeed News, “For a social media company that prides itself on helping humans communicate, they have a really, really shitty time communicating with each other internally.”