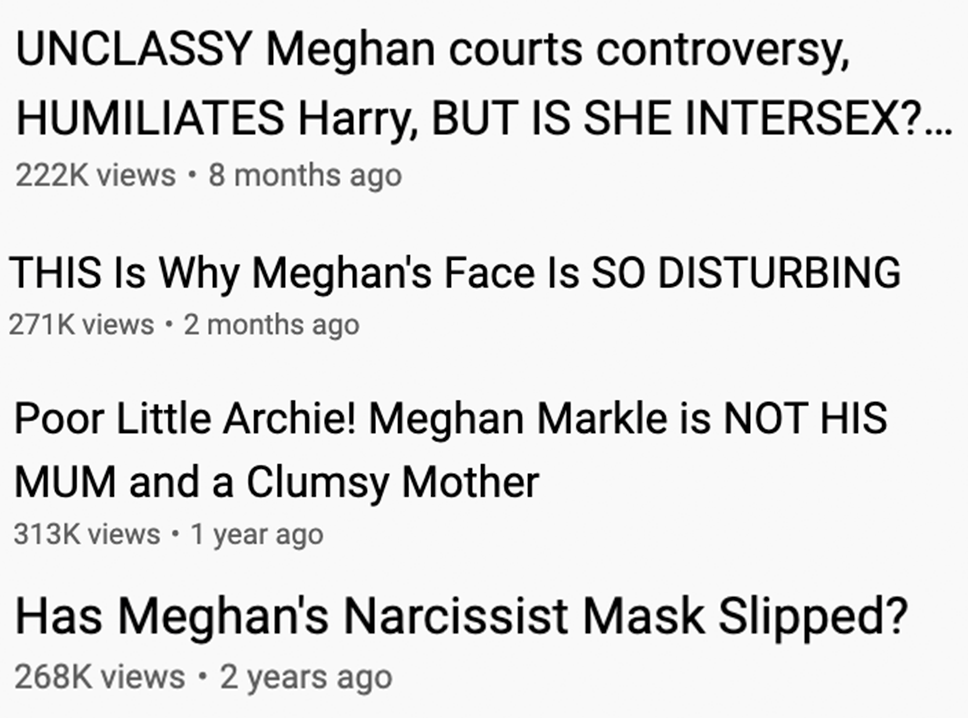

As recently as a week ago, searching “Meghan Markle” on YouTube would bring up videos explicitly attacking the Duchess of Sussex or spreading misinformation about her within the first 10 results. When you’d click through to videos like “CONFIRMED! Samantha Markle Claims Archie & Lilibet Are Fake, Meghan Was NEVER pregnant,” YouTube’s recommendation algorithm would bring up even more content from an interconnected community of channels with names like “Murky Meg” and “Keep NYC MegaTrash Free.” These accounts use the video platform to spread negative content about the duchess, and have garnered tens of thousands of subscribers and millions of views.

But now you’ll find only videos from verified accounts and news outlets in the search results for “Meghan Markle” and first recommendations in the sidebar. Even if you’ve explicitly searched for and started watching videos that accuse Meghan Markle of being a narcissist, or videos claiming she wore a fake belly to make herself appear pregnant, YouTube’s recommendations sidebar won’t initially serve you similar videos. It’s unclear when exactly the change occurred, and YouTube declined to specify.

This change comes following months of news coverage about the anti-Meghan YouTube network and the ad revenue its videos generate, as well as a public conversation about what constitutes criticism and when it crosses the line into harassment. BuzzFeed News has been inquiring about various accounts and YouTube’s policies for more than a month, and an Input magazine story about the battle between Meghan supporters and YouTube channel creators was published Monday.

However, a spokesperson for the platform pushed back when BuzzFeed News asked if media coverage of this community had prompted such a marked, sudden change in search results.

“For news and certain topics prone to misinformation, we’ve trained our systems over the years to elevate content from authoritative sources in search results and recommendations,” spokesperson Elena Hernandez said. “Millions of search queries get this treatment today, including certain queries related to Meghan Markle. As with all queries, search results on YouTube are algorithmically ranked and constantly changing as new content is uploaded over time.”

And yet the change in the search algorithm only seems to apply to the query of the duchess’s name. If you search “Meghan Markle pregnancy” or “Meghan Markle Harry,” some of the first results will be negative videos promoting conspiracy theories from unverified anti-Meghan channels. If you do click on one of these anti-Meghan videos, the recommendations algorithm continues to suggest videos from verified sources first; for the most part, one has to scroll to see other videos from channels that post only negative content about the duchess.

YouTube’s search and recommendations algorithms constitute some of YouTube’s most powerful content moderation tools, said journalist and YouTuber Carlos Maza, whose highly publicized experience as the victim of targeted harassment from a conservative YouTuber in 2019 was one of the factors that prompted the platform to adopt its current set of harassment and cyberbullying guidelines. However, he told BuzzFeed News that these changes may not have an effect on the anti-Meghan YouTube ecosystem because the approach is likely “too little, too late.” It shows the platform “misunderstands how hate mobs work on YouTube,” he said.

Maza said that this change may keep hate videos away from a “lay audience” because it will minimize the chance that casual viewers will stumble onto anti-Meghan videos — but it won’t stop “hate-driven” users who use charged keywords to reinforce their existing views. “Just like in physical life, hate mobs don't grow by appealing to a wide audience, but by relying on a core base of active participants who will seek out and recruit other people who might be inclined to agree with them,” he said.

Historically, YouTube’s recommendations had been entirely optimized for engagement, which had the unintended effect of promoting and sending viewers to the most extreme content and starting them on the path to, for example, the alt-right movement. BuzzFeed News has published extensive reporting on how YouTube’s search and recommended videos algorithms can lead to right-wing radicalization.

The algorithmic changes appear to be YouTube’s primary way of addressing the extensive community of channels whose stated sole purpose is to attack Meghan, as well as accounts that claim to be royal commentary channels but, based on the majority of their content, are de facto anti-Meghan accounts. The changes help contain the conspiracy theory–laden narratives about the duchess and may keep them from seeping into the wider waters of the platform. And YouTube will be able to avoid defining what counts as a hate account and when legitimate criticism crosses a line into malice.

Although Sussex fans have raised questions for years about why anti-Meghan accounts were platformed and monetized, the scrutiny of royal YouTube picked up steam in January, when social media analytics company Bot Sentinel published a report examining anti-Meghan content across various online platforms. The report was the third in a series about what the company dubbed “single-purpose hate accounts,” profiles that appeared to be on the internet only to attack a certain individual — in this case, the Duchess of Sussex.

In the report’s section about YouTube, Bot Sentinel identified 25 channels whose videos “focused predominantly on disparaging Meghan.” These channels, according to the report, had a combined overall view count of nearly 500 million, and Bot Sentinel estimated that, collectively, the accounts have generated approximately $3.5 million from ad revenue over their lifetimes. (A number of the creators of the channels identified in the report disputed the company’s estimates but so far have declined to publicly share their earnings.) The report called on the platform to remove the channels, citing YouTube’s harassment and cyberbullying policy, which explicitly states that “accounts dedicated entirely to focusing on maliciously insulting an identifiable individual” are examples of content it does not allow.

And yet to the frustration of many Sussex fans (and the glee of Meghan and Harry haters), YouTube has, so far, removed only one anti-Meghan channel. (Another channel was temporarily removed, but as Input magazine reported, it was restored.)

This is because YouTube’s current community guidelines have a significant vulnerability that allows for targeted harassment and, often, the spread of misinformation about an individual without breaking the platform’s rules. Anti-Meghan channels are platformed — and many are monetized — due to the company’s definition of what you need to attack about a person for it to count as harassment, hate speech, and cyberbullying.

According to YouTube’s terms of service, in order to be considered “content that targets an individual with prolonged or malicious insults,” the insults must be based on “intrinsic attributes,” which the company defines as “physical traits” and “protected group status.” This protected group policy lists 13 attributes that cannot be attacked: age, caste, disability, ethnicity, gender identity or expression, nationality, race, immigration status, religion, sex/gender, sexual orientation, victims of a major violent event and their kin, and veteran status.

YouTube’s rules indicate that everything else, including attacks based on falsehoods and potentially defamatory content, is fair game. And thus the platform hosts conspiracy videos that falsely imply that Meghan is intersex or provide “evidence” that she engaged in sex work before meeting Prince Harry — and these videos have more than 100,000 views.

In an emailed statement, YouTube reiterated exactly what types of attacks qualify as harassment and hate speech. “We have clear policies that prohibit content that targets an individual with threats or malicious insults based on intrinsic attributes, such as their race or gender,” spokesperson Jack Malon said.

Part of the issue, Maza said, is that most content that is racist, anti-LGBTQ, xenophobic, or otherwise hateful that is targeted at a person based on “intrinsic attributes” is insidious. Indeed, much has been written about the “racist undertones” of the UK media’s coverage of Meghan.

“Hate speech is always implicit,” Maza said. “Good hate speech, good bigoted propaganda, dabbles in euphemism, stereotypes, a wink and a nod. It’s always suggested or alluded to. If your approach to moderating speech is that there has to be a clear rule, you’re never going to have a good policy. The focus must be implicit bias … Violence and explicit bigotry come from implicit bigotry.”

Most anti-Meghan YouTubers have proved to be good at toeing YouTube’s line when it comes to their videos, using coded words like “uppity” and “classless” to describe Meghan, or playing into the angry Black woman trope by portraying her as someone who regularly throws tantrums.

In an ironic twist, it is one of the largest pro-Meghan channels, the Sussex Squad Podcast, that regularly posts content in flagrant violation of YouTube’s harassment policy; a number of their videos contain attacks on intrinsic attributes of members of the royal family and royal reporters. (As of publication time, the account was still on YouTube, but the majority of their videos had been removed from the channel. A YouTube spokesperson told BuzzFeed News on Friday that they had removed one video for violation of the platform’s harassment policy.)

The only prominent anti-Meghan channel that YouTube has permanently removed so far is from a creator known as “Yankee Wally,” whom BuzzFeed News interviewed in a previous story. Before the channel was removed, YouTube had hosted hundreds of Yankee Wally’s videos promoting conspiracy theories about the Duchess of Sussex for four years, including videos such as “Meghan Markle is uncultured, uncivil spoilt and rude. But she CAN see 500 yds with her rat-eyes.”

Public figures can fight back against videos that contain falsehoods. Rapper Cardi B recently won a $4 million defamation lawsuit against YouTuber Tasha K, who had made videos claiming that the entertainer had contracted herpes, prostituted herself, cheated on her husband, and done hard drugs. The day after her legal victory, Cardi B tweeted, “I need a chat with Megan Markle.”

Even though a federal judge had ruled Tasha K’s videos to be defamatory, Cardi B still had to obtain an injunction forcing her to remove them from her YouTube channel — as they still did not violate the platform’s terms of service.

Maza told BuzzFeed News that YouTube’s current policy is, at best, “lip service.” “The policy they created is less a rule against harassment; it’s more a specific set of metrics or qualifiers harassers have to achieve to avoid penalties.”

In June 2019, as a journalist for Vox, Maza made a video compiling all of the times that conservative vlogger Steven Crowder had used anti-LGBTQ language in YouTube videos to harass him. In response to questions about Crowder’s cyberbullying of Maza, YouTube said at the time that Crowder was only expressing his “opinion” and that his use of “hurtful language” (such as calling Maza a “lispy queer”) was acceptable, saying, “Opinions can be deeply offensive, but if they don’t violate our policies, they’ll remain on our site.” Following massive public backlash, the platform retracted its statement and demonetized Crowder’s channel. In December of that year, it introduced its current set of harassment and cyberbullying guidelines.

An obvious counterargument to questions about anti-Meghan harassment accounts is that she’s a public figure and not immune from criticism. According to YouTube’s guidelines, there are some exceptions to the hate speech policy for high-profile figures. The platform may allow harassment “if the primary purpose is educational, documentary, scientific, or artistic in nature,” according to the guidelines. That includes “content featuring debates or discussions of topical issues concerning individuals who have positions of power.” The platform cautions, however, that these exceptions “are not a free pass to harass someone.”

But as Meghan herself said in an interview, there’s a difference between criticism, which she says she and her husband welcome, and abuse. “If things are fair, [criticism] completely tracks for me,” she told reporter Tom Bradby in October 2019. “If things are fair. If I did something wrong, I’d be the first one to go, ‘Oh my gosh, I’m so sorry, I would never do that.’ But when people are saying things that are just untrue — and they’re being told they’re untrue, but they’re still allowed to say them — I don’t know anybody in the world that would feel like that’s OK. And that’s different than just scrutiny.”

Maza pointed out that allowing this kind of bad faith abuse hurts YouTube as a whole. “From a pure health-of-the-ecosystem standpoint, there is no meaningful, logical, or ethical explanation that a high-profile harassment campaign against a royal is conducive to the media ecosystem. It either poisons the platform or it doesn’t.

“The rules are important because this kind of harassment on a large scale affects every user on the platform,” he said. “YouTube rewards cruelty.”

Imran Ahmed, CEO of the Center for Countering Digital Hate, a nonprofit campaigning against online hate and misinformation, echoed this point in an interview with BuzzFeed News, saying that on platforms like YouTube, “Hate speech is the most profitable type of speech.”

Ahmed said, “The monetization of hatred is now a well-established business model.” It is particularly lucrative, he said, because it benefits from the way YouTube and other platforms amplify and provide “competitive advantage to speech that inspires emotional responses.”

“That in itself is a dynamic that doesn’t just affect Meghan Markle; it affects all of society,” Ahmed said.

YouTube’s removal of videos from anti-Meghan creators in the duchess’s most obvious search result, combined with the deranking of this content in recommendations, provides some indication that the platform acknowledges the hate-focused channels it hosts. But Maza said that this measure will likely have no effect on the anti-Meghan community. “By the time YouTube takes action, those bad actors have already built loyal subscriber bases — a problem that we’ve seen replicated on YouTube with conspiracy channels after mass shooting events and contested elections.

“It’s like winning the battle and losing the war,” he said. “YouTube would prefer to spend hours dancing around policy questions when the real question is, ‘When do you shut down shit, and why haven’t you done it?’”