Earlier this week, as a follow-up to the ACLU's excellent report on Amazon’s facial recognition tool, Rekognition, BuzzFeed News decided to run a few experiments of our own. Among the tests, we ran photos of the FBI's historical Most Wanted list (507 photos) and the National Institute of Standards and Technology's mugshot identification database (3,248 photos) through Amazon’s celebrity recognition tool — a feature Amazon introduced in June 2017, six months after the broader Rekognition platform launched. (Amazon, as you’ll see below, says this was a very silly thing to do.)

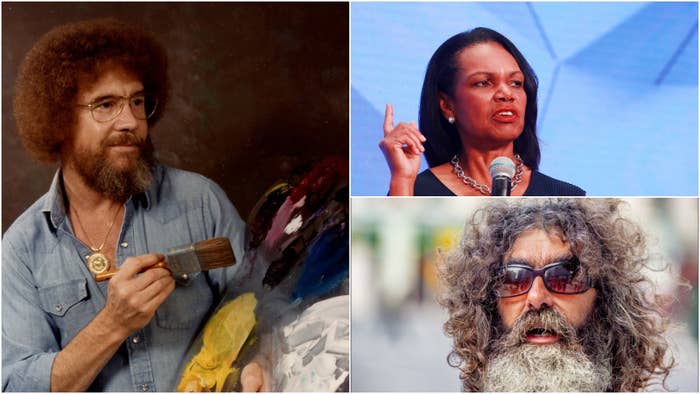

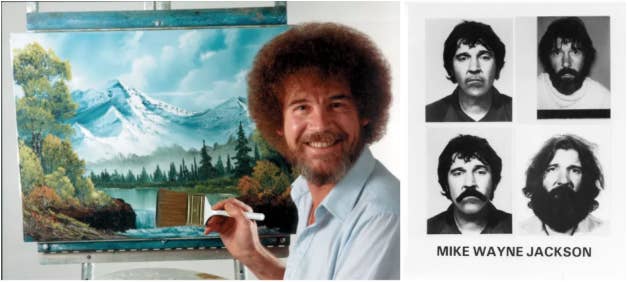

Things got weird. On the FBI test, Amazon’s tool returned 17 matches that identified criminals as celebrities with 95% to 100% confidence — among them the late Grateful Dead guitarist Jerry Garcia, Sons of Anarchy actor Kim Coates, the late television painting instructor Bob Ross, and Darryl McDaniels, a member of Run DMC. In our second test, using NIST’s mugshot database, the celebrity recognition tool matched, with 96% confidence, the arrest photo of a black man to former secretary of state Condoleezza Rice.

What was interesting here was less the mismatches than the high confidence scores. Confidence scores are common in facial recognition. They are a “percentage match score” that is “a factor of the system’s algorithm,” according to the Handbook of Face Recognition. “If it means anything at all, it is a measure of the certainty in the match by the system; this is the equivalent of an eyewitness saying they are 75% sure that the image depicts the person they observed at the crime scene.”

We didn’t know what to make of the fact that Amazon’s celebrity recognition tool was matching Bob Ross, the late ASMR-inducing television painter, to suspected triple-murderer Mike Wayne Jackson, with 97% confidence. (“Yikes,” Joan Kowalski, president of Bob Ross Inc., told BuzzFeed News.) We went in thinking the celebrity recognition tool uses the same confidence scoring technology as Amazon’s other facial recognition tools (same platform, right?).

Turns out, it doesn’t. Amazon told us that celebrity recognition is completely different from the facial recognition on its Rekognition platform, from its underlying technology to its customer base. Celebrity recognition, Amazon said, consists of a pre-trained model designed to return many more lookalikes for, say, Johnny Depp dressed up in different roles. The tool is designed for entertainment, social media use cases, and other fun applications, the company said. Amazon, in fact, denied it was a facial recognition system, calling it a “classification” system instead. It’s a system that might identify Jack Sparrow as Johnny Depp, even with the heavy makeup and the elaborate costume, “with high confidence,” according to Amazon.

In other words, according to the company, Rekognition would likely not return matches with the same “high confidence” if photos of Jack Sparrow and Johnny Depp were fed into Amazon’s face recognition system rather than its celebrity recognition system. Face recognition, Amazon said, involves exact visual similarity between two faces.

But you wouldn’t know the celebrity recognition tool was any different by reading Amazon’s documentation or its marketing. Now, this isn’t a huge problem, but it’s messy — messy enough that Amazon, which had itself been conflating the two, made significant changes to its Rekognition documentation after BuzzFeed News contacted it. Those changes now make it clear that the celebrity recognition tool is architecturally different from its facial recognition tool.

Amazon declined to comment on these changes, but a spokesperson did say this: “As we shared with BuzzFeed, this is a silly article that seems more designed to drive clicks than to educate people who use machine learning. To use a celebrity detection model — that is trained to recognize celebrities including movie actors who frequently disguise themselves to look like others (including criminals) and that is optimized to return celebrity matches — for anything other than trying to detect celebrities is just silly. It’s certainly absurd for doing anything where the integrity of the match is critical like in law enforcement.” **

There are two things at issue here. The first is the appearance of false positives on Amazon’s celebrity recognition tool. Amazon says we shouldn’t worry about this because confidence scores don’t work the same way on its celebrity recognition tool and its facial recognition tool — even though they are part of the same Rekognition platform. For example, hypothetically, comparing two pictures — like Johnny Depp playing Jack Sparrow vs. the real Johnny Depp — on Amazon’s celebrity tool might return a 95% confidence or higher, because the tool is designed to work that way; while on Amazon’s face recognition system, the AI would return a much lower result, around 60% confidence, when comparing the same two pictures. Amazon says customers using its celebrity tool want it to return results even if that means more of them are false positives.

“If they’re different services, it wouldn’t be fair to compare them,” Joshua Kroll, a computer scientist at the School of Information at UC Berkeley, told BuzzFeed News. “But if they present it all under one brand, then as a consumer you just think you know how it works — and that’s confusing at best.”

None of this was apparent until we began poking around and asking questions, and this raises the second issue: Facial recognition is playing a growing role in law enforcement — Amazon itself is a player in this — yet most systems are not at all transparent or open to outside researchers, and we end up having to take private companies at their word about how these technologies work, as we do in this very story.

Kroll said confusion around Amazon’s facial recognition capabilities could be mitigated if the company would disclose more details around how it builds and runs its AI recognition tools. The inscrutability in the system is “the company’s own choice,” he said. It’s proprietary technology, and Amazon considers it a trade secret. In essence, Amazon’s facial recognition platform is a black box.

“Amazon is not particularly open about how its system works or what the confidence score even means with respect to the model,” he said. “The company just says in its documentation that higher scores are better, with scores going from 0 to 100.” (After the ACLU’s report last week, Amazon recommended setting a confidence threshold of 95% and higher when using Rekognition. A day later, the company nudged that up to 99% for sensitive applications of its face recognition tools, such as law enforcement activities.)
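To make those thresholds concrete, here is a minimal sketch of what a cutoff does to a list of matches. It is an illustration under stated assumptions, not Amazon's implementation: the `filter_matches` helper is hypothetical, the records are hand-written rather than real API output, and the `Name` and `MatchConfidence` keys are assumed to mirror the shape of a celebrity recognition response. The two scores are the ones reported in this story. Note that Amazon's 95% and 99% recommendations were made for its face recognition tools, not the celebrity tool, so applying them here is purely for demonstration.

```python
from typing import Dict, List

# Threshold values quoted in the article, in percent.
GENERAL_THRESHOLD = 95.0
SENSITIVE_THRESHOLD = 99.0


def filter_matches(matches: List[Dict], threshold: float) -> List[Dict]:
    """Hypothetical helper: keep matches scoring at or above the threshold."""
    return [m for m in matches if m["MatchConfidence"] >= threshold]


# Hand-written sample records (not real API output), using the two
# confidence scores reported in this story.
sample_matches = [
    {"Name": "Bob Ross", "MatchConfidence": 97.0},
    {"Name": "Condoleezza Rice", "MatchConfidence": 96.0},
]

kept_at_95 = filter_matches(sample_matches, GENERAL_THRESHOLD)
kept_at_99 = filter_matches(sample_matches, SENSITIVE_THRESHOLD)

print([m["Name"] for m in kept_at_95])
print([m["Name"] for m in kept_at_99])
```

Both mismatches clear a 95% cutoff and neither clears 99%. A threshold only decides which scores get shown to the user; it says nothing about what a given score means for a particular model.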

And yet these confidence scores, Kroll told BuzzFeed News, are inherently meaningless. “Confidence scores are very specific to the AI model you’ve built, and the way you’ve trained it,” he said.

Clare Garvie, an associate at Georgetown Law’s Center on Privacy and Technology, agreed. Someone looking at a 99% confidence score may think that the result means the machine is 99% confident the photo is a match to the person they’re trying to identify, to the exclusion of all other people. “That’s not what it means,” Garvie said.

Instead, confidence is “the system’s own confidence in its ability to match two faces,” she explained. It’s entirely dependent on what the developers have told the system to match any input to.

But a machine telling a user it is 99% confident? It’s very suggestive, said Garvie. “Confidence scores are very problematic in that what they suggest isn’t necessarily what they mean,” she said.

In the police context, Garvie said it is “far from clear” that law enforcement agents or other operators of a facial recognition system understand this. “When faced with a match and a possible score, how it’s interpreted comes down to how the officers are trained,” she said. “I’m concerned that a system that suggests to an agent whose job is to look at two photos of a person and determine — ‘Are these people the same, are these people sufficiently similar to conduct an investigation?’ — will be biased in favor of matches where the system produces a high confidence.”

In the hands of law enforcement, facial recognition could be used to identify suspects and persons of interest in a criminal investigation (to be fair, it could also be used to find missing children or victims of human trafficking, as Amazon often points out), and the systems, which tend to be as much of a black box as Amazon’s tech, can’t be interrogated for potential embedded biases. Police could use the technology to surveil people at a public protest, and in fact have already done so. Facial recognition could even chart people’s movements through a town based on where their faces show up on camera.

And when your face is already in a facial recognition system, it can be hard to be sure where it ends up. Amazon says it “may store” images processed by the service “solely to provide and maintain the service” and “improve” Amazon Rekognition. But there may still be a danger in third-party users abusing the system.

In July, EdWeek reported on a mobile app called RealTimes, which created slideshows from photos and videos on users’ smartphones. The app’s parent company, RealNetworks, reportedly used the data collected from the smartphone app to create a database of 20 million faces, which RealNetworks used as a “training set” to teach AI systems how to recognize individual faces. From the data, the company developed new facial recognition software called SAFR, which it is now reportedly encouraging K-12 schools to adopt for security and as a prevention measure against school shootings.

Garvie said she didn’t know whether the same thing was possible with Amazon’s facial recognition tools. “But,” she told BuzzFeed News, “it has happened before.”

** Point taken! But Amazon’s quiet update to its documentation does seem to be evidence of the company’s failure to clearly explain the differences between features of a single platform that promises to provide “highly accurate facial analysis and facial recognition” for a variety of applications. We were just examining the confidence scoring of two tools on the same Rekognition platform.