In early May, a sales representative for an esports streaming site called DingIt.tv sent an email to let people in the ad industry know his site had lots of traffic and was looking for partners to help monetize it with video ads.

“DingIt.tv is a global eSports gaming platform with 30m monthly active users and 350m video avails,” the rep wrote in an email provided to BuzzFeed News by an executive who received it.

DingIt does have impressive traffic. Alexa, a web analytics service, currently ranks it as one of the top 200 sites in the US. SimilarWeb says DingIt attracts more monthly visits than the websites for the Boston Globe, Atlanta Journal-Constitution, Nascar, and the heavily advertised travel site Travelocity.com.

In the email, the sales rep made a point to address any concerns about the quality of DingIt’s traffic. He wrote that three verification companies measured the site’s traffic and found that “under 3%” of DingIt’s traffic is attributed to bots.

Bots are widely considered to be the scourge of ad fraud. They can generate massive amounts of fake traffic and ad impressions by automatically clicking on websites and ads in large numbers, thereby siphoning off what is estimated to be billions of dollars a year from the digital advertising industry.

DingIt may have a low level of bots coming to its site, but that doesn’t mean it’s safe from ad fraud. Reporting by BuzzFeed News and an independent investigation by verification firm DoubleVerify found that DingIt uses an increasingly popular method of generating fraudulent ad impressions and traffic that fools some verification companies precisely because it doesn’t involve bots. And like so many things on the internet, the technique, and even some of the traffic used to execute this burgeoning method of ad fraud, traces some of its origins to porn.

“Unlike bot traffic of the past, this new type of fraud utilizes legitimate user-generated traffic to deliver thousands of fraudulent impressions,” Roy Rosenfeld, the VP of product management at DoubleVerify, told BuzzFeed News.

Rosenfeld said DoubleVerify classified DingIt as a fraud site according to industry definitions after a detailed investigation by his company’s ad fraud lab. BuzzFeed News and independent ad fraud researcher Augustine Fou separately documented the same activity.

Adam Simmons, the VP of content and marketing for DingIt, told BuzzFeed News that the site uses “4 independent traffic verification tools that monitor each view on our video player” and prevents any fraudulent traffic from viewing content or ads on the site.

“Should we identify patterns or sources of low quality traffic (such as a partner), we not only cease any commercial relationship with them but also block any traffic from those sources,” he said in an email.

Simmons then ceased communications with BuzzFeed News after being presented with information linking the original parent company and CEO of DingIt to a network of 86 sites referring fraudulent traffic to DingIt for more than a year, and after being sent the conclusions of the DoubleVerify report.

Traffic “out of thin air”

The technique used by DingIt, as well as a growing number of mainstream sites, is outlined in a presentation published today by Fou. He has for weeks been documenting what he calls “Bot-Free Traffic Origination Redirect Networks.”

Fou told BuzzFeed News these networks can “originate traffic out of thin air” and direct it to a specific site thanks to code that instructs them when to load a specific webpage, and how long to keep it open before automatically loading the next website in the chain. No human action is required to load webpages or redirect to the next site — it’s a perpetual-motion machine for web traffic and ad impressions.
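
The mechanism Fou describes can be sketched as a small simulation. This is an illustrative model only, not code from any of the networks discussed: the site names, the 20-second dwell time, and the one-hour session are all assumptions, chosen to show how a timed chain keeps producing page loads with no further user action.

```python
from itertools import cycle


def simulate_redirect_chain(sites, dwell_seconds, session_seconds):
    """Return the ordered list of pages a hidden window would load.

    Each page stays open for `dwell_seconds`, then the window moves on to
    the next site in the chain, looping until the session ends.
    """
    if dwell_seconds <= 0:
        raise ValueError("dwell_seconds must be positive")
    loads = []
    elapsed = 0
    for site in cycle(sites):
        if elapsed >= session_seconds:
            break
        loads.append(site)
        elapsed += dwell_seconds
    return loads


# Hypothetical chain: placeholder names, not real domains.
chain = ["highlight-a.example", "highlight-b.example", "video-site.example"]
loads = simulate_redirect_chain(chain, dwell_seconds=20, session_seconds=3600)
print(len(loads), "page loads in one hour")
print(loads.count("video-site.example"), "of them on the final site")
```

Under these assumed values, a single forgotten window accounts for 180 page loads in an hour, 60 of them on the last site in the chain. Each of those loads can carry one or more ad impressions.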

Ad fraud detection company Pixalate documented this activity in a recent investigation and dubbed the web properties using it “zombie sites” due to their ability to automatically generate traffic without human activity.

This form of ad fraud was also detailed in two recent BuzzFeed News investigations, which serve to highlight how the technique is growing in popularity and is now being used on more mainstream sites.

In one case, Myspace and roughly 150 local newspaper websites owned by GateHouse Media said they were unwittingly part of redirect networks that racked up millions of fraudulent video ad impressions. Both companies told BuzzFeed News the offending subdomains on their sites were managed by third parties, and that Myspace and GateHouse received no revenue from any fraudulent impressions. The pages have since been shut down.

“How were [these subdomains] getting all those video views? Well, they just originated it. A user is not doing anything, but the page is just redirecting by itself,” Fou said.

He said the industry focus on detecting bot traffic leaves it vulnerable to attacks such as this, which simply automates the loading of different webpages to generate ad impressions and obscure the origins and nature of the traffic. That element of obfuscation is key because in many cases the traffic that kicks off the cycle of redirects comes from porn sites.

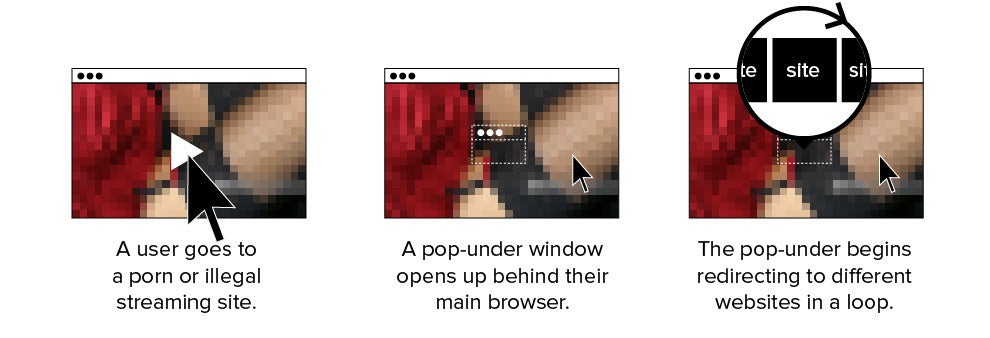

On porn sites, as well as on many illegal streaming and file-sharing sites, it starts with a visitor clicking anywhere on the page. Regardless of what they meant to click, the site clickjacks the action and uses it to open a pop-under window behind the user’s main browser tab.

As the user watches porn or other content in the main window, unscrupulous ad networks use the hidden window to load different websites at timed intervals, racking up views and ad impressions. A user often has no idea this is happening in the background, and in some cases porn sites load the pop-under as an invisible window that can’t be seen. (That window will load websites and ad impressions until the entire browser is closed, or until the computer loses its internet connection.)

This reinforces how porn websites overall are a critical part of the online infrastructure used by ad fraudsters to steal billions of dollars a year from advertisers. Along with generating fraudulent traffic and impressions through these redirect networks, porn sites often show visitors malicious ads that trick them into downloading malware that infects their computer. Once infected, these computers can become part of the botnets used to commit ad fraud.

“If it’s proven in porn, you can go mainstream with it,” Fou said.

This approach of generating automatic redirects using hidden browser windows has long been used by unscrupulous ad networks working with porn publishers, according to Jérôme Segura, the lead malware intelligence analyst for Malwarebytes.

“It’s easy to commit fraud by having those redirections cycle through or load legitimate websites, and it looks like the user has actually visited those sites,” he told BuzzFeed News.

The process of redirecting from one site to the next also helps erase the connection to a porn site, according to Fou.

“The point of redirects is to launder the traffic so that by the time it hits the page you can’t tell it came from porn,” he said, noting that mainstream advertisers do not want to be on porn sites, or put in front of users browsing them.

At the heart of these redirect schemes are domains that direct the traffic from one participating site to the next while a pop-under window is open. The amount of traffic they can generate is astounding. Examples include rarbg.to (1.6 billion visits in the last 12 months, according to SimilarWeb), redirect2719.ws (5,377 million visits since launching in April), fedsit.com (812 million visits since launching in April), and u1trkqf.com (434 million visits since launching in July). Some redirect domains have homepage text that describes them as ad servers operated by ad networks, but many have no content and their ownership information is hidden in domain records.

“These are sites that come out of nowhere — there’s no content, and humans will not type in this alphanumeric [domain name] — and this traffic is made up through redirects,” Fou told BuzzFeed News, emphasizing that redirecting traffic to webpages is different from serving ads.

Because this method of generating traffic does not involve bots, it’s difficult for verification companies to recognize that the traffic is fraudulent, he said.

“It’s technically not bots, as in a fake browser hitting a page,” he said. “A lot of this is going to show up [in website analytics reports] as direct or referral traffic.”

DingIt and 86 esports highlight sites

It was the referral traffic going to DingIt that caught the attention of both DoubleVerify and Fou.

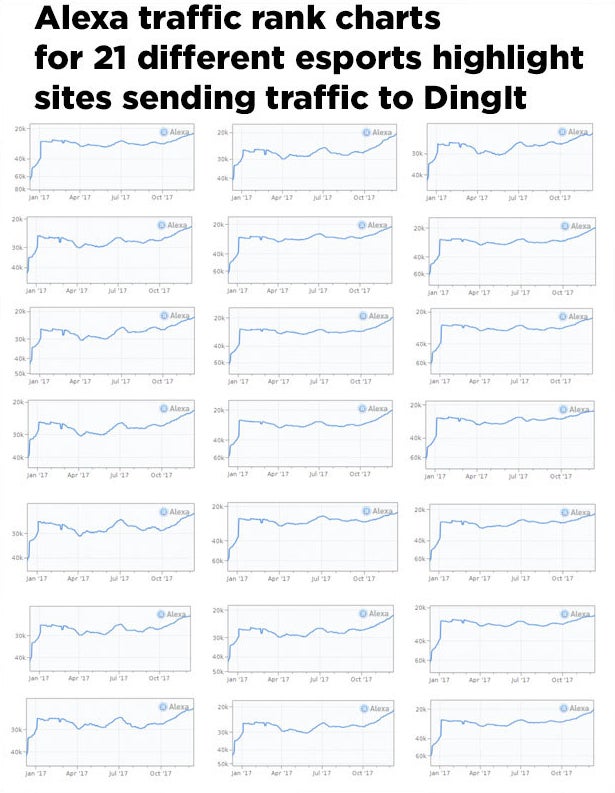

Just over a year ago DingIt began receiving traffic from a new network of more than 80 websites that, like DingIt, feature video clips from video game tournaments and other esports content. The sites have names such as dotahighlight.info, leaguehighlight.org, hearthstonehighlight.com, and leagueoflegendshighlight.com. They use the same design templates, and often feature the same content on their homepages. Domain registration records show that 74 of them were registered on the exact same day, Sept. 17, 2016.
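
The registration-date signal can be checked mechanically. The sketch below assumes you already have (domain, registration date) pairs from a WHOIS export; the records shown are placeholders invented for illustration, not the actual highlight sites, and the function name and threshold are hypothetical.

```python
from collections import defaultdict
from datetime import date


def cluster_by_registration_date(records, min_cluster_size=3):
    """Group domains by registration date; keep dates shared by many domains."""
    by_date = defaultdict(list)
    for domain, registered_on in records:
        by_date[registered_on].append(domain)
    return {
        day: sorted(domains)
        for day, domains in by_date.items()
        if len(domains) >= min_cluster_size
    }


# Hypothetical records (placeholder domains and dates), for illustration only.
records = [
    ("game-one-highlight.example", date(2020, 1, 15)),
    ("game-two-highlight.example", date(2020, 1, 15)),
    ("game-three-highlight.example", date(2020, 1, 15)),
    ("unrelated-blog.example", date(2018, 6, 2)),
    ("another-site.example", date(2019, 11, 30)),
]
clusters = cluster_by_registration_date(records)
for day, domains in clusters.items():
    print(day.isoformat(), len(domains), "domains:", ", ".join(domains))
```

A shared registration date proves nothing on its own, since registrars process bulk purchases every day. It becomes meaningful when it coincides with shared templates, shared content, and shared traffic.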

Data from SimilarWeb and Alexa shows that these sites constantly redirect traffic among themselves before a significant portion of it ends up on one site: DingIt.

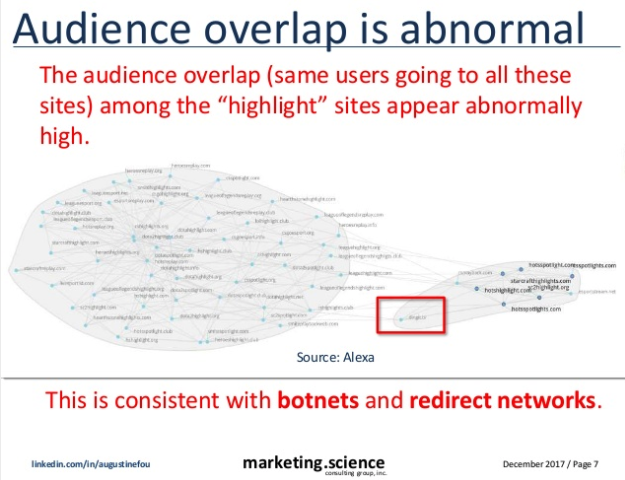

This slide from Fou's presentation uses a graph generated by Alexa — an analytics service that DingIt itself uses to measure its traffic — to show how traffic is being redirected among the esports highlight sites and DingIt in two tight networks.

Data from Alexa and SimilarWeb show that the highlight sites share traffic patterns. Fou said this is a telltale sign of redirect domains because they are passing the same traffic among themselves and therefore have the same audience and traffic at roughly the same time. (Identical traffic patterns were also present in a previous BuzzFeed News investigation into a redirect scheme.)
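
One way to put a number on "the same traffic at roughly the same time" is to correlate daily visit counts between sites. The sketch below is an assumption about how such a check could be done, not the method Fou, Alexa, or SimilarWeb actually use. The visit counts, site names, and the 0.95 threshold are all hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series carries no pattern to compare
    return cov / sqrt(var_x * var_y)


def similar_pairs(daily_visits, threshold=0.95):
    """Return site pairs whose daily visit counts move almost in lockstep."""
    pairs = []
    for (a, xs), (b, ys) in combinations(sorted(daily_visits.items()), 2):
        r = pearson(xs, ys)
        if r >= threshold:
            pairs.append((a, b, round(r, 3)))
    return pairs


# Hypothetical daily visit counts for three placeholder sites.
daily_visits = {
    "highlight-a.example": [100, 400, 380, 120, 900, 860, 150],
    "highlight-b.example": [110, 410, 370, 130, 880, 870, 140],
    "news-site.example": [500, 520, 480, 510, 300, 290, 505],
}
pairs = similar_pairs(daily_visits)
print(pairs)
```

With this made-up data, only the two highlight placeholders are flagged. Independent sites with genuinely separate audiences would rarely track each other this closely day after day.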

In order to kick off the chain of redirects, these esports highlight sites receive paid traffic generated from pop-unders on porn, file sharing, and illegal streaming sites, including Txxx.com, Kissanime.ru, and Openload.co, according to data from SimilarWeb.

The combination of the pop-under traffic and the redirect network ensures that by the time the traffic makes its way to DingIt it appears to analytics software and, most importantly, to verification companies as human traffic coming from esports sites.

“A significant portion of the traffic on DingIt.tv involved invalid traffic practices, including but not limited to low quality traffic sourced from auto redirects, pop-ups from adult/streaming/torrent sites, and malware,” according to the report produced by DoubleVerify’s ad fraud lab. "Moreover, when traffic is driven to DingIt.tv through one of these sources, the ads and videos auto-play without any user interaction.”

DoubleVerify found that DingIt uses a system that engages the autoplay behavior only when invalid traffic is directed to the site. This keeps an average user who navigates to DingIt on their own from seeing videos, and ads, displayed this way.

“If a user does innocently navigate directly to the site, that user will not see this forced redirect behavior, as it has to be triggered by the appropriate entry page from the redirect chains,” the report said.

When first contacted by BuzzFeed News, Adam Simmons, DingIt’s vice president of content and marketing, offered a lengthy reply to explain the ways in which DingIt analyzes traffic coming to its site to ensure it’s not fraudulent.

“As we are a premium ad-funded business, the balance for us is maximising our audience while still making sure that audience is human, engaged and high quality,” he said in an email. “In an ideal world (and internet ecosystem), all traffic would be perfect. Unfortunately, there are a minority of bad actors within the ecosystem who use grey or black hat methods to source traffic on behalf of publishers or their partners. This low quality traffic is an issue we deal with every day and have invested significant resources into blocking.”

He said the company works with four verification services to ensure the site does not monetize fraudulent traffic, though he declined to name the partners.

“We filter traffic on a per session basis to block low quality or IVT [invalid traffic]. This approach results in our advertisers receiving less than 2% IVT as measured by third party verification tools,” he said.

Simmons said DingIt uses an internal system to automatically prevent DingIt video content from playing in a pop-up window. He also questioned the accuracy of the data found in SimilarWeb and Alexa, saying that it could be counting impressions or traffic that were blocked by the site’s verification tools.

BuzzFeed News asked Simmons if DingIt pays the network of esports highlight sites to send traffic. “We do not pay those sites for traffic,” he said.

Simmons was less clear when asked about the connection between DingIt and the sites, and whether they provided traffic based on a relationship with a partner or other entity.

“If we have a commercial relationship, we can identify the original purchaser. However, it can be challenging to identify the root source of traffic where there are sites or individuals with indirect incentives to promote our content,” he said.

Contrary to what Simmons said, there are not “indirect” incentives for the esports redirect sites to send traffic to DingIt. Eighty-four of those sites have private domain ownership information. Of the two that are public, one was registered in 2014 to OC Shield Technologies Ltd — the original parent company of DingIt.

Mark Hain, whose email is associated with the domain registration, is the founder of OC Shield Technologies Limited. He has also served as CEO of DingIt, and was listed as its founder in a 2015 article about the company raising $1.5 million in venture capital.

The other public owner of an esports redirect domain is Peer Visser, the commercial director of OC Shield Ltd, a related company where Hain also worked. Visser’s site, Csrunhighlight.com, was registered on the same day in September as the vast majority of the other highlight sites. Both OC Shield Ltd and OC Shield Technologies were shareholders in DingIt upon its incorporation in 2014, and Visser was a director of the company.

It’s unclear what Hain’s current role is with DingIt, as he opened but did not reply to two emails from BuzzFeed News. Visser opened but did not reply to an email.

As for a commercial relationship, as Simmons put it, OC Shield Technologies Limited earned close to £300,000 in consulting fees from DingIt in 2016, according to accounts filed by DingIt with the UK Companies House. It’s unclear if these fees are related to the redirect sites, as Hain and Visser did not respond to BuzzFeed News.

After initially exchanging a series of emails with BuzzFeed News, Simmons stopped replying to questions after being informed of the connection between Hain, Visser, OC Shield, DingIt, and the redirect sites. Simmons also didn’t reply to a subsequent email that shared the findings from DoubleVerify’s investigation into DingIt, and its classification of the site as fraudulent.

This isn’t the first time DingIt’s traffic-buying practices have come under scrutiny. On Sept. 20, 2016, a UK esports news site reported that DingIt was buying 20% of its traffic. At the time, SimilarWeb showed the site was receiving significant referral traffic directly from streaming and file-sharing websites that use pop-unders, as well as from redirect domains operated by ad networks.

“Ding It touts ‘amazing’ growth but purchases a chunk of traffic through ads,” read the headline on the article by Slingshot.

“Pay one of these folk some cash and your page views will increase rapidly. But those readers probably won’t even be aware their browser visited your site, let alone return of their own accord in the future,” Phillip Hallam-Baker, VP and principal scientist at Comodo Inc., said in the article.

Within 10 days of that story appearing, the group of newly registered esports redirect sites was suddenly the single biggest source of referral traffic to DingIt, and has remained so ever since.

Ad networks are part of the problem

The use of pop-ups and pop-unders, and of redirect networks, to generate traffic for more mainstream publishers like DingIt is growing, and it’s causing major ad networks to address the issue.

This summer, Google published a blog post to highlight an updated policy that bans any and all use of these techniques by publishers looking to be part of its AdSense display ad network.

“To simplify our policies, we are no longer permitting the placement of Google ads on pages that are loaded as a pop-up or pop-under. Additionally, we do not permit Google ads on any site that contains or triggers pop-unders, regardless of whether Google ads are shown in the pop-unders,” the blog post said.

A Google FAQ also says that “sites using AdSense may not be loaded by any software that triggers pop-ups, modifies browser settings, redirects users to unwanted sites, or otherwise interferes with normal site navigation.”

Mike Zaneis, the CEO of the Trustworthy Accountability Group, an ad industry initiative to fight fraud, said it’s essential for publishers to disclose where their traffic is coming from. If they are sourcing some or most of it from porn pop-unders or so-called “zero click” traffic providers, they need to let advertisers know.

“Regarding traffic from porn sites, for TAG it's really about the quality of the traffic,” he told BuzzFeed News. “We require all publishers to disclose the percentages of sourced traffic and where that traffic comes from. This new level of transparency allows buyers to know if the traffic should be suspect or potentially unsafe for their brand.”

This kind of disclosure is still rare, and another challenge is that ad networks themselves play a key role in routing porn traffic through redirect domains to help obscure its origins, according to Segura of Malwarebytes. He said in the past he’s investigated malicious pop-ups and pop-unders only to find that the sites being loaded were actually owned by the very same ad network that placed the ads.

“The waters are very murky when it comes to these ad networks,” he said.

Segura said the digital advertising industry itself bears some blame for the ubiquity of fraud and shady practices targeting brands and consumers.

“The level of sophistication behind [ad] fraud makes it more difficult to catch,” he said. “But at the same time, I do believe a large part of the problem is the business practices of the industry itself.”