Members of vulnerable groups such as the Latino, Muslim, and Jewish communities are being disproportionately targeted online with disinformation, harassment, and computational propaganda — and they don’t trust big social platforms to help them, according to new research by the Palo Alto–based Institute for the Future’s Digital Intelligence Lab shared exclusively with BuzzFeed News.

Researchers found that online messages and images on platforms such as Twitter that originate in the Latino, Muslim, and Jewish communities are co-opted by extremists to spread division and disinformation, often resulting in more social media engagement for the extremists. This causes members of these groups to pull away from online conversations and platforms, and to stop using them to engage and organize, further ceding ground to trolls and extremists.

“We think that the general goal of this [activity] is to create a spiral of silence to prevent people from participating in politics online, or to prevent them from using these platforms to organize or communicate,” said Samuel Woolley, the director of the Digital Intelligence Lab. The platforms, meanwhile, have mainly met these complaints with inaction, according to the research.

Woolley said he expects strategies like fomenting division, spreading disinformation, and co-opting narratives that were used by bad actors in the 2016 election to be employed in the upcoming 2020 election. “In 2020 what we hypothesize is that social groups, religious groups, and issue voting groups will be the primary target of” this kind of activity, he said.

The lab commissioned eight case studies from academics and think tank researchers to look at how different social and issue groups in the US are affected by what researchers call “computational propaganda,” defined as “the assemblage of social media platforms, autonomous agents, and big data tasked with the manipulation of public opinion” (in other words, digital propaganda). The groups studied were Muslim Americans, Latino Americans, moderate Republicans, immigration activists, black women gun owners, environmental activists, anti-abortion and abortion rights activists, and Jewish Americans.

In one example, immigration activists told researchers that a “know your rights” flyer instructing people what to do when stopped by ICE was photoshopped to include false information, and then spread on social media. A member of the Council on American-Islamic Relations said the hashtag related to the organization’s name (#CAIR) has been “taken over by haters” and used to harass Muslims. Researchers who looked at anti-Latino messaging on Reddit also found that extremist voices discussing Latino topics “appear to be louder than their supporters.”

Jewish Americans interviewed by researchers said online conversations about Israel have reached a new level of toxicity. They spoke of “non-bot Twitter mobs” targeting people, and “coordinated misinformation campaigns conducted by Jewish organizations, trying to propagandize Jews.”

“What we've come to understand is that it's oftentimes the most vulnerable social groups and minority communities that are the targets of computational propaganda,” Woolley told BuzzFeed News.

These findings align with other data showing that these social groups bear the brunt of online harassment. According to a 2019 report from the ADL, 27% of black Americans, 30% of Latinos, 35% of Muslims, and 63% of the LGBTQ+ community in the United States have been harassed online because of their identity.

Bots

While bots were generally not a dominant presence in the Twitter conversations analyzed by researchers, automated accounts were used to spread hateful or harassing messages to different communities.

Tweets gathered last year about John McCain's funeral and the Arizona Republican primary to replace him in the Senate showed that bots tried to direct moderate Republicans to america-hijacked.com, an anti-Semitic conspiracy website. (It has not published new material since 2017.) Researchers also found that Twitter discussions about reproductive rights saw anti-abortion bots spread harassing language, while pro–abortion rights bots spread politically divisive messages.

Researchers used the Botometer tool to identify likely automated accounts, and gathered millions of tweets based on hashtags for analysis. They combined this data analysis with interviews conducted with members of the communities being studied. The goal was to identify and quantify the human consequences of computational propaganda, according to Woolley.

“The results range from chilling effects and disenfranchisement to psychological and physical harm,” reads an executive summary from Woolley and Katie Joseff, the lab’s research director.

Joseff said people in the studied communities feel they’re being targeted and outmaneuvered by extremist groups and that they don’t “have the allyship of the platforms.”

“They didn't trust the platforms to help them,” she said.

In response to a request for comment, a Twitter spokesperson pointed to the company's review of its efforts to protect election integrity during the 2018 midterm elections.

"With elections taking place around the globe leading up to 2020, we continue to build on our efforts to address the threats posed by hostile foreign and domestic actors. We're working to foster an environment conducive to healthy, meaningful conversations on our service," said an emailed statement from the spokesperson. (Reddit, the other social platform studied in the research, did not immediately reply to a request for comment.)

Joseff and Woolley said more extreme and insular social media platforms like Gab and 8Chan are where harassment campaigns and messaging about certain social groups are incubated. Ideas that begin on these platforms later dictate the conversation that takes place on more mainstream social media platforms.

“The niche platforms like Gab or 8Chan are spaces where the culture around this kind of language becomes fermented and is built,” Woolley said. “That’s why you’re seeing the cross-pollination of attacks across more mainstream social media platforms … directed at multiple different types of groups.”

Co-opting

Researchers found that several of the communities studied are dealing with hashtag and content co-opting, a process by which something used by a group to promote a message or cause gets turned on its head and exploited by opponents.

For example, immigration activists interviewed for one case study said they’ve seen anti-immigration campaigns “video-taping activists and portraying them as ICE officers online, and reframing images to represent immigrant organizations as white supremacist supporters.”

Those interviewed said the perpetrators are tech savvy, “use social media to track and disrupt activism events, and have created memes of minorities looting after a natural disaster.”

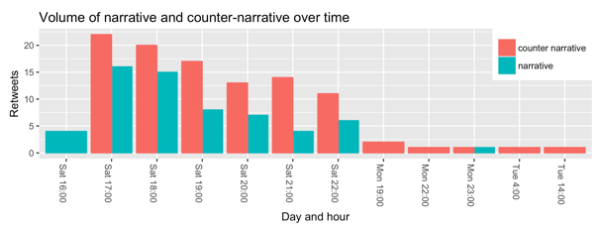

The researchers found that messages initially pushed out by immigration activists were consistently co-opted by their opponents — and that these counter-narrative messages generate more engagement than the original, as shown in this graphic representing one example:

“In all cases but one a narrative was consistently drowned out by a counter narrative,” the researchers wrote.

Another case study about Latino Americans gathered data from Reddit. It found that members of r/The_Donald, a major pro-Trump subreddit where racist and extremist content often surfaces, were hugely influential in organizing and promoting discussions related to the Latino community. By filling Reddit with their content, as well as organizing megathreads and other group discussions, they drowned out Latino voices. Researchers also wrote that trolls have at times impersonated experts “in attempts to sow discord and false narratives” related to Latino issues.

Old Tropes

The specific disinformation identified by researchers was often connected to long-running conspiracies or false claims. The case studies of online conversations about women’s reproductive rights and climate science found that old tropes and falsehoods continue to drive divisive conversations.

In the case of women’s reproductive rights, researchers studied 1.7 million tweets posted between Aug. 27 and Sept. 7 last year, a window chosen to coincide with the Kavanaugh confirmation hearing. The two most prominent disinformation campaigns identified were false claims about Planned Parenthood. One false claim was that the founder of the organization started it to target black people for abortions. The claim rests on a deliberate misquote of Margaret Sanger, who was in fact warning against people thinking the organization was targeting black Americans.

“Recurrence of age-old conspiracies or tropes occurred across many of the case studies,” Joseff said.

Key to the spread of hate, division, and disinformation online is inaction from social media companies. Many of those interviewed for the studies said that when a harassment campaign is underway they have nowhere to turn, and the tech giants don’t take any action.

“There is just so much, it can't be a full-time job,” the director of a chapter of CAIR told researchers when asked about muting or blocking those who send hateful messages.

When platforms do take action, they sometimes end up banning the wrong people. One interview subject who participates in online activism related to immigration issues said that Twitter removed the account of a key Black Lives Matter march organizer last June.

“Subsequently the march was sent into disarray and could have been avoided would major voices of social rights activist organizers have been present in the conversation,” the researchers wrote.

The case studies also found that algorithms and other key elements of how social media platforms work are easily co-opted by bad actors.

“Their algorithms are gameable and can result in biased or hateful trends,” the executive summary said. “What spreads on their platforms can result in very real offline political violence, let alone the psychological impact from online trolling and harassment.”