Twitter will soon begin removing altered videos and other media that it believes threaten people’s safety, risk mass violence, or could cause people not to vote. It will also start labeling significantly altered media, no matter the intent.

The company announced the new rule Tuesday. It will go into effect March 5.

“You may not deceptively share synthetic or manipulated media that are likely to cause harm,” Twitter said in a blog post. “In addition, we may label Tweets containing synthetic and manipulated media to help people understand the media’s authenticity and to provide additional context.”

Twitter's new policy arrives amid growing worries that deepfakes and other manipulated media could have an impact on the 2020 election and beyond. Last May, Facebook came under fire for keeping up a slowed-down video of House Speaker Nancy Pelosi, which was meant to make her look intoxicated, without clearly saying it was altered. Pelosi recently called Facebook a “shameful” company. Under its new policy, Twitter would have labeled the Pelosi video as altered, said Yoel Roth, the company’s head of site integrity.

“Part of our job is to closely monitor all sorts of emerging issues and behaviors to protect people on Twitter,” Del Harvey, Twitter's vice president of trust and safety, said on a Tuesday call with reporters. “Our goal was really to provide people with more context around certain types of media they come across on Twitter and to ensure they’re able to make informed decisions around what they’re seeing.”

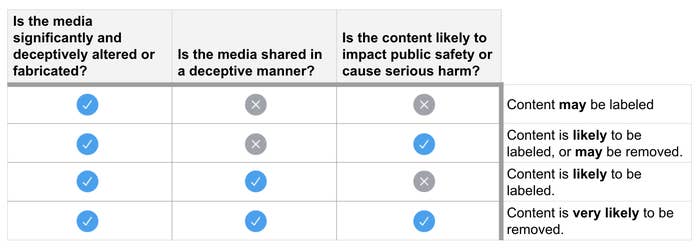

When Twitter finds media that is altered, it might take a series of actions: label the tweet as misleading, reduce its visibility by removing it from algorithmic recommendation engines, provide additional context, and show a warning before people retweet it. For those videos and other media the social network deems capable of causing harm, it might remove them altogether.

People are less likely to share misinformation when they’re forced to stop and think before hitting the share button, as BuzzFeed News has reported. So by putting some form of warning with the retweet button, Twitter is likely to reduce some of these altered videos’ virality.

Harvey said she wants people to at least know what they’re sharing before they retweet, giving them the ability to make a fully informed decision to share or add additional context. “If somebody doesn’t know that what they’re about to try to share on Twitter is altered, or they’re unaware of that, we want to try to give them that information so that they have the ability to make sure that they’re tweeting what they want to with it,” she said.

Once the rule goes into effect, Twitter will again have to make a series of judgment calls over what constitutes “harm,” what risks “mass violence,” and what is fake enough to be considered “significantly and deceptively altered or fabricated.”

If Twitter’s past behavior is any indication, it will be slow to take action. Last July, the company said it would append a label to tweets from public figures that break its rules. Despite many tests, it has yet to put the label into action.